One of the ways to provide connectivity from AWS to Aviatrix (AVX) is via IPsec tunnels. There are, however, a few limitations, and throughput is the biggest concern. We can of course increase the number of connections, and that serves its purpose well, but let's do it a little differently.

We will leverage a different type of TGW attachment: CONNECT. To do that, we need our Aviatrix Transit Gateway (TrGW) deployed in AWS and an underlay for our GRE tunnels. This underlay is another, well-known attachment type: the VPC ATTACHMENT.

Important note:

“You can create up to 4 Transit Gateway Connect peers per Connect attachment (up to 20 Gbps in total bandwidth per Connect attachment), as long as the underlying transport (VPC or AWS Direct Connect) attachment supports the required bandwidth.”
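To put the quote into numbers: AWS documents up to 5 Gbps per Connect peer (one GRE tunnel), so the theoretical ceilings work out as below, both for the maximum and for the design used in this post (2 Connect attachments, 2 peers each). These are upper bounds that assume traffic is spread across the peers; the TrGW instance size and the underlying transport attachment can lower them.

$$
\begin{aligned}
\text{per Connect attachment (max)} &= 4\ \text{peers} \times 5\ \text{Gbps} = 20\ \text{Gbps} \\
\text{per Connect attachment (this design)} &= 2\ \text{peers} \times 5\ \text{Gbps} = 10\ \text{Gbps} \\
\text{DEV} + \text{PROD (this design)} &= 2 \times 10\ \text{Gbps} = 20\ \text{Gbps}
\end{aligned}
$$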

There is also another factor to consider at such high bandwidth: the Aviatrix TrGW CPU, which in practice means its instance size.

Why connect AWS TGW to the Aviatrix TrGW this way? Why not just use Aviatrix spoke GWs? One example is a brownfield environment that is already struggling with lots of VPCs attached to AWS TGW. Let's treat this as PHASE 1 of our GOAL: MCNA.

Below are all the steps needed to achieve that, with some details:

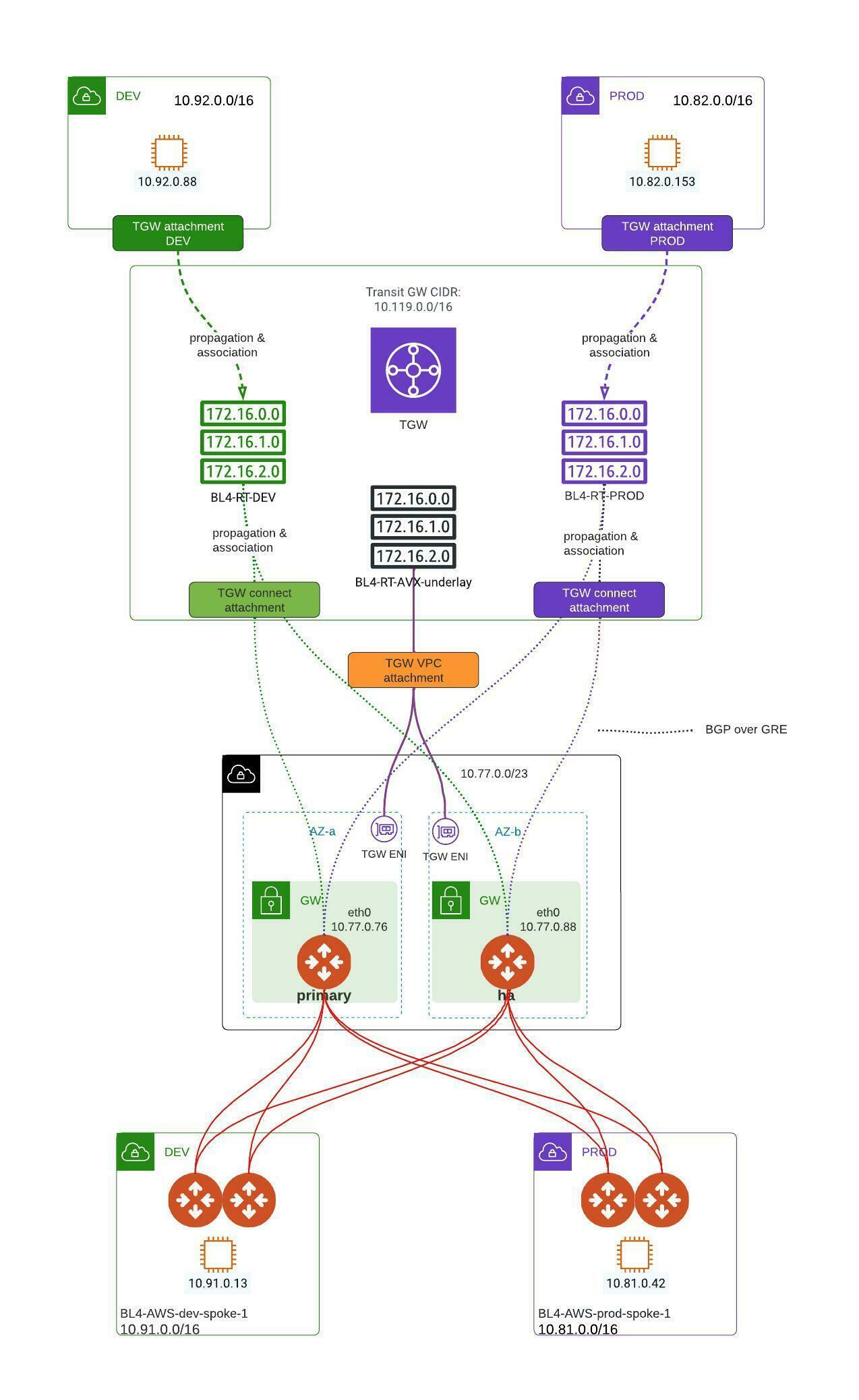

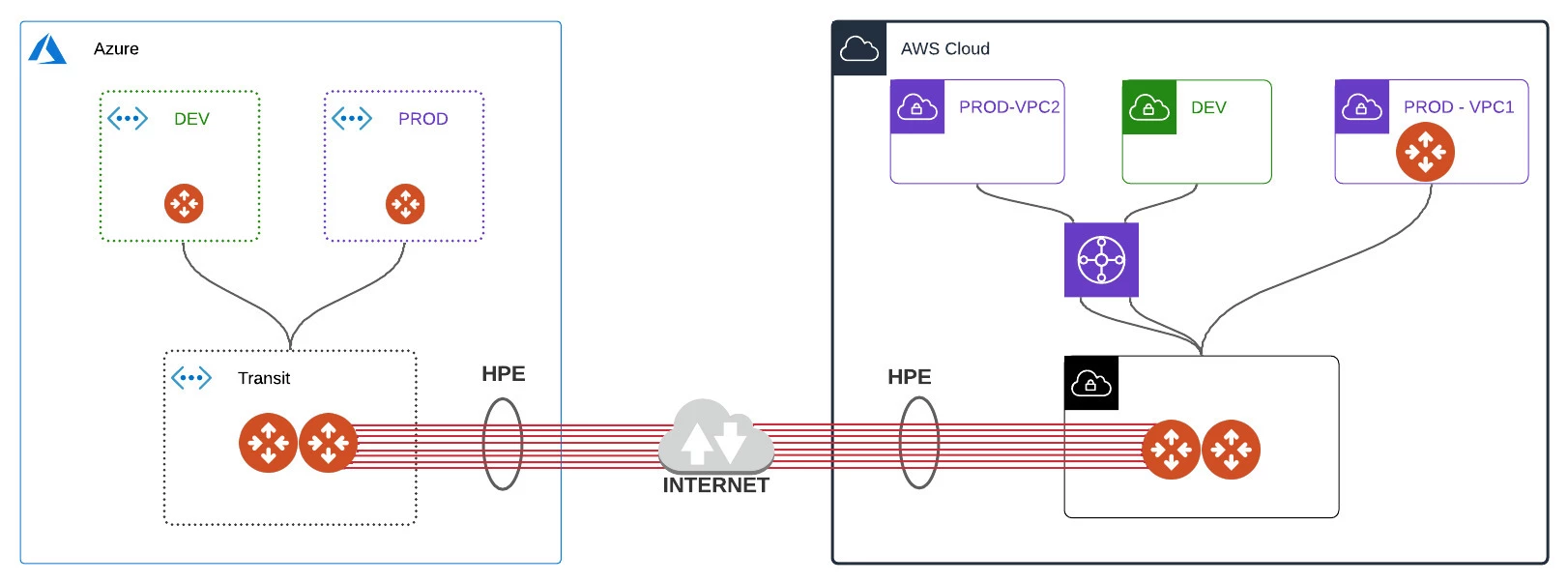

Overview:

STEP 0 - Prerequisites

- DEV VPC and PROD VPC are already created and attached to AWS TGW, with routing for 10.0.0.0/8 pointing to the TGW attachment.

- AWS TGW has 3 route tables created:
  - BL4-RT-DEV
  - BL4-RT-PROD
  - BL4-RT-AVX-underlay

- Aviatrix gateways are already deployed.

- ASN assigned to the Aviatrix TrGW: 65101

- ASN on TGW: 64512 (default)

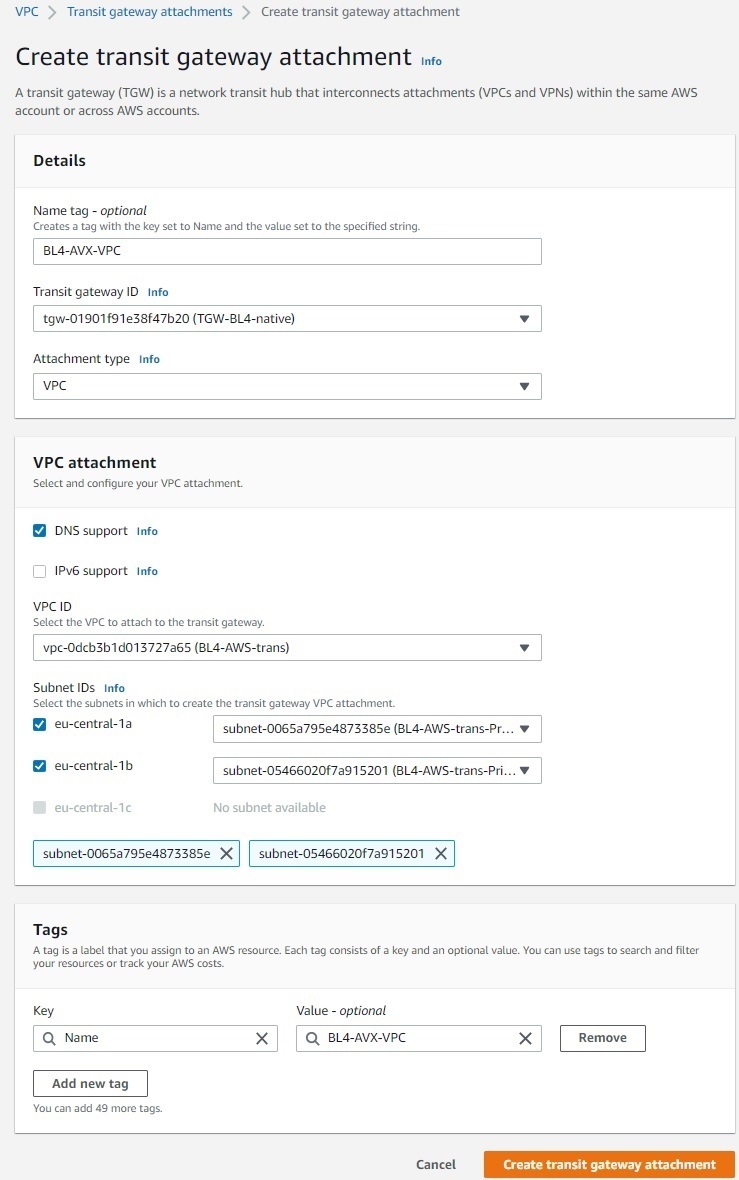
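For readers following along in Terraform, the three TGW route tables from the prerequisites could be declared roughly as below. This is a sketch: `aws_ec2_transit_gateway.TGW` and `TGW_RT_PROD` match the names used in the Infrastructure as Code section at the end of this post, while `TGW_RT_DEV` and `TGW_RT_AVX_underlay` are hypothetical names chosen for illustration.

```hcl
# Sketch: three dedicated TGW route tables.
# TGW_RT_DEV and TGW_RT_AVX_underlay are hypothetical resource names.
resource "aws_ec2_transit_gateway_route_table" "TGW_RT_DEV" {
  transit_gateway_id = aws_ec2_transit_gateway.TGW.id
  tags = {
    Name = "BL4-RT-DEV"
  }
}

resource "aws_ec2_transit_gateway_route_table" "TGW_RT_PROD" {
  transit_gateway_id = aws_ec2_transit_gateway.TGW.id
  tags = {
    Name = "BL4-RT-PROD"
  }
}

resource "aws_ec2_transit_gateway_route_table" "TGW_RT_AVX_underlay" {
  transit_gateway_id = aws_ec2_transit_gateway.TGW.id
  tags = {
    Name = "BL4-RT-AVX-underlay"
  }
}
```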

STEP 1 - AWS - create VPC attachment

To carry GRE traffic, we first need to create a VPC attachment. We can use the default route table (RTB) on TGW or, as I did, create a dedicated one (“BL4-RT-AVX-underlay”). This may be obvious to AWS engineers, but this kind of attachment creates an ENI in each selected subnet, and those ENIs are where our traffic gets pointed to.

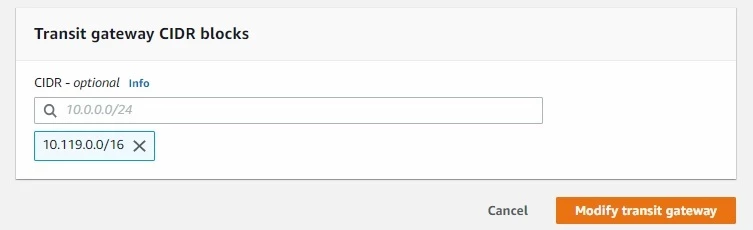
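A Terraform sketch of this underlay attachment follows. The resource name `TGW_attachment_AVIATRIX_VPC` matches the one referenced in the Infrastructure as Code section at the end; the two input variables, the tag value, and the route table resource `TGW_RT_AVX_underlay` (standing for BL4-RT-AVX-underlay) are hypothetical names for illustration.

```hcl
# Sketch: underlay VPC attachment for the Aviatrix transit VPC.
# Variable names, the tag value and TGW_RT_AVX_underlay are hypothetical.
variable "avx_transit_vpc_id" {
  type = string
}

variable "avx_transit_subnet_ids" {
  type        = list(string)
  description = "One subnet per AZ; TGW creates an ENI in each"
}

resource "aws_ec2_transit_gateway_vpc_attachment" "TGW_attachment_AVIATRIX_VPC" {
  transit_gateway_id = aws_ec2_transit_gateway.TGW.id
  vpc_id             = var.avx_transit_vpc_id
  subnet_ids         = var.avx_transit_subnet_ids

  # Use the dedicated underlay RTB instead of the TGW default one
  transit_gateway_default_route_table_association = false
  transit_gateway_default_route_table_propagation = false

  tags = {
    Name = "BL4-AVX-underlay"
  }
}

resource "aws_ec2_transit_gateway_route_table_association" "underlay" {
  transit_gateway_attachment_id  = aws_ec2_transit_gateway_vpc_attachment.TGW_attachment_AVIATRIX_VPC.id
  transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.TGW_RT_AVX_underlay.id
}
```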

STEP 2 - AWS - assign CIDR block to TGW

We need to allocate a CIDR block to TGW; addresses from it are then used to terminate the GRE tunnels on the TGW side. In our case it's 10.119.0.0/16.

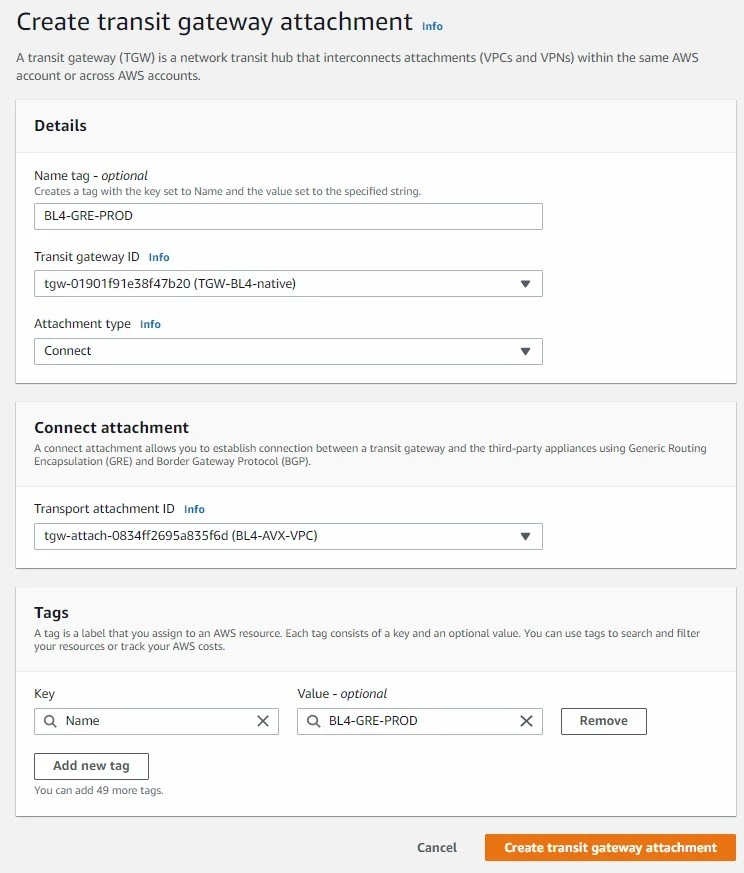

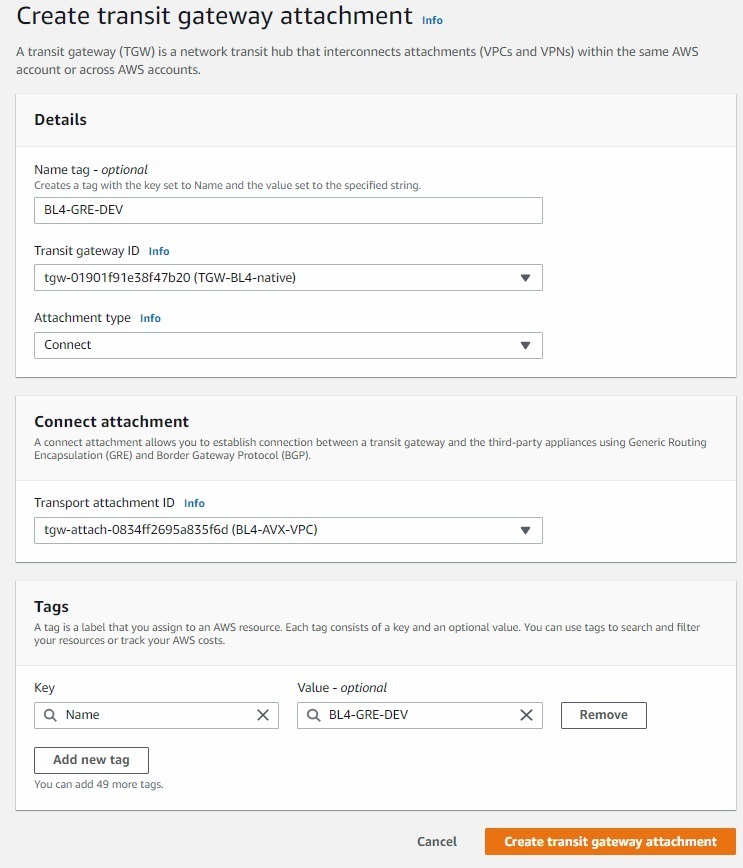
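In Terraform, the CIDR block is an argument of the TGW resource itself (`transit_gateway_cidr_blocks`), not a separate resource. A minimal sketch using the TGW resource name from the Infrastructure as Code section; the tag value is hypothetical. AWS requires an IPv4 TGW CIDR block to be a /24 or larger, which 10.119.0.0/16 satisfies.

```hcl
# Sketch: TGW with the default Amazon-side ASN and a CIDR block
# from which the TGW side of each GRE tunnel (Connect peer) is addressed.
resource "aws_ec2_transit_gateway" "TGW" {
  amazon_side_asn             = 64512
  transit_gateway_cidr_blocks = ["10.119.0.0/16"]

  tags = {
    Name = "BL4-TGW" # hypothetical name
  }
}
```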

STEP 3 - AWS - create “CONNECT” attachments (x2)

On top of our VPC attachment we will create GRE tunnels. To do that, we need to create Connect attachments: one for the PROD and one for the DEV environment.

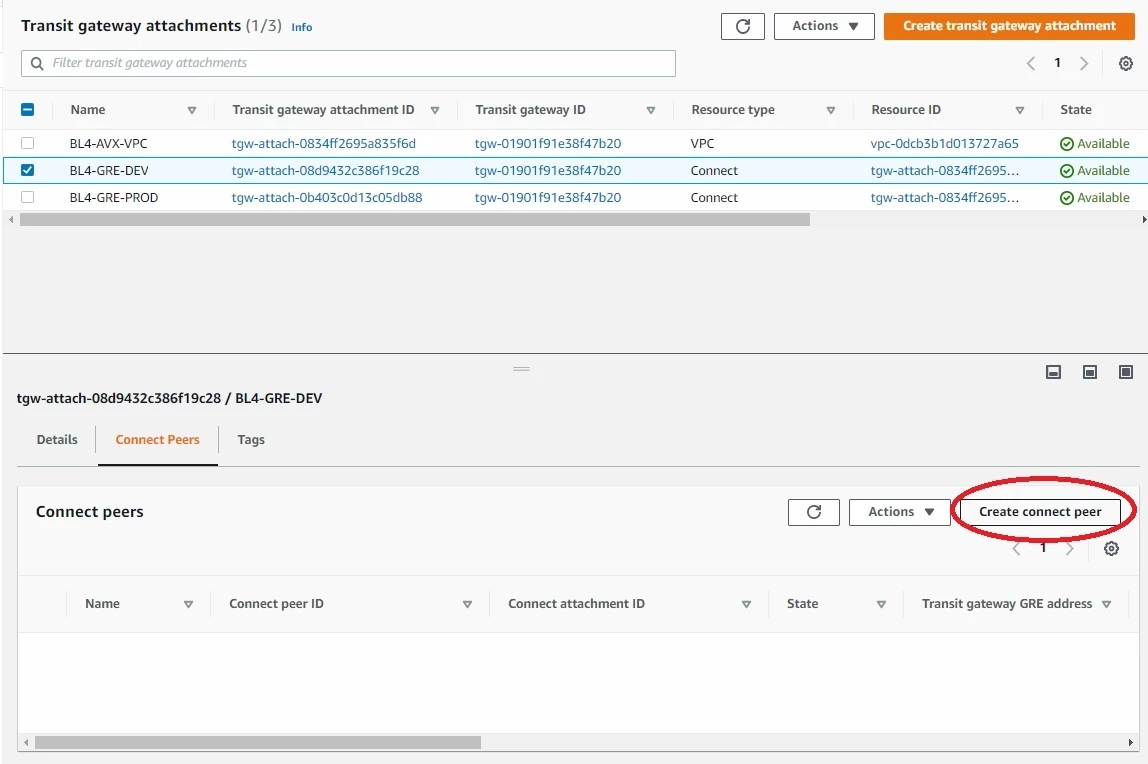

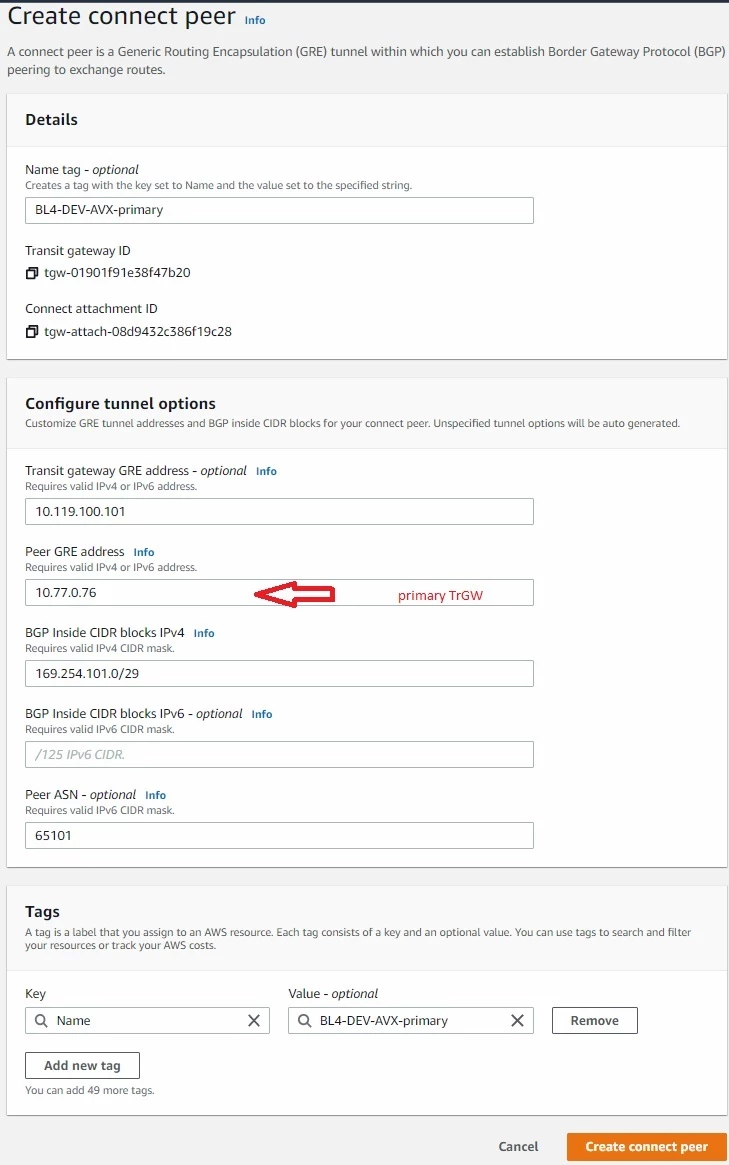

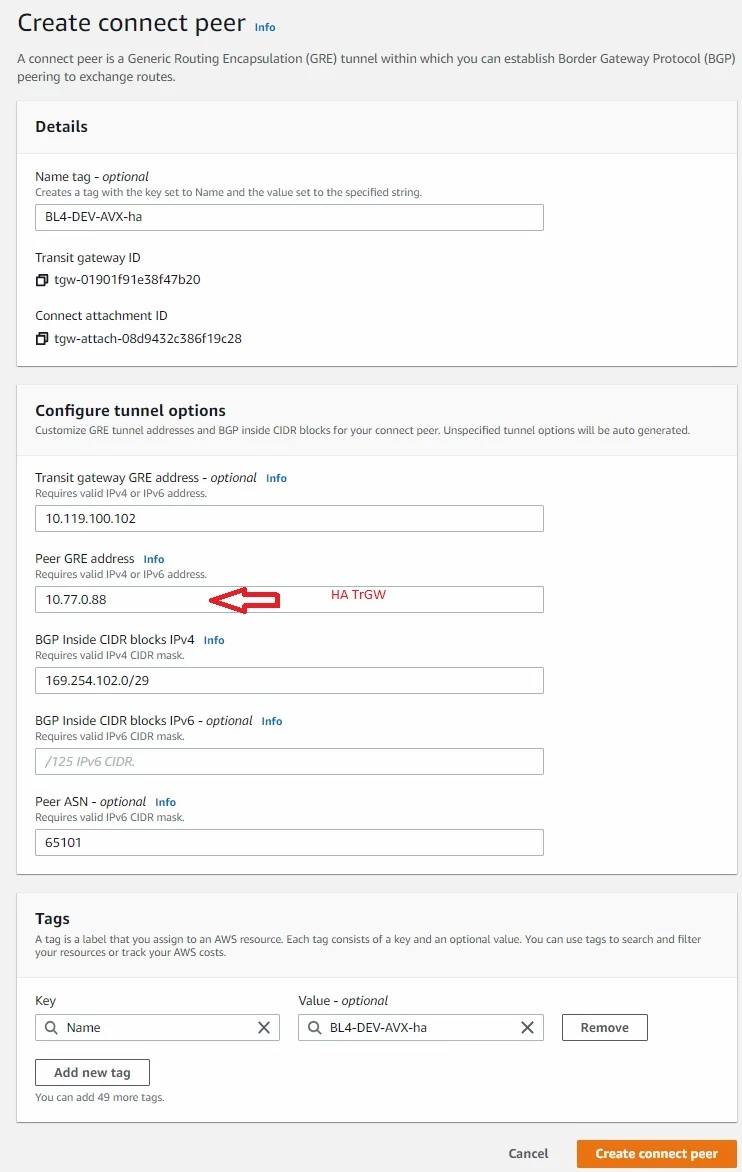

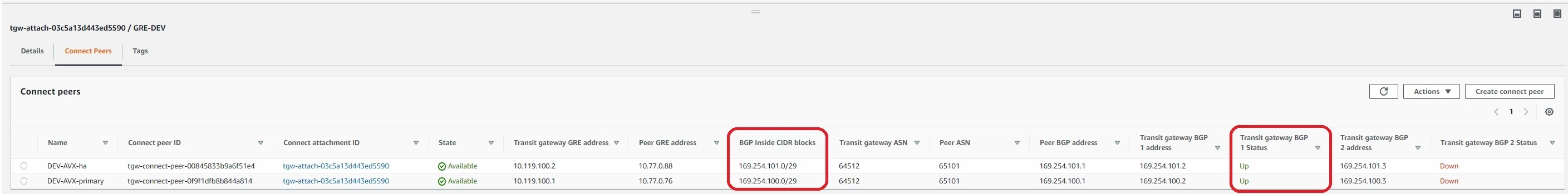
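The PROD Connect attachment appears in the Infrastructure as Code section at the end; a DEV counterpart could look like the sketch below. `connect_DEV` and the `BL4-GRE-DEV` tag are hypothetical names mirroring the PROD ones.

```hcl
# Sketch: DEV Connect attachment on top of the Aviatrix VPC attachment.
# "connect_DEV" and the tag value are hypothetical, mirroring connect_PROD.
resource "aws_ec2_transit_gateway_connect" "connect_DEV" {
  transport_attachment_id = aws_ec2_transit_gateway_vpc_attachment.TGW_attachment_AVIATRIX_VPC.id
  transit_gateway_id      = aws_ec2_transit_gateway.TGW.id
  protocol                = "gre" # Connect only supports GRE (also the default)

  # Associated explicitly with BL4-RT-DEV instead of the default RTB
  transit_gateway_default_route_table_association = false

  tags = {
    Name = "BL4-GRE-DEV"
  }
}
```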

STEP 4 - AWS - Define Connect peers for both attachments

For each Connect attachment (DEV and PROD) we need to create 2 peers (GRE endpoints): the first for the primary TrGW and the second for the HA TrGW. Taking the DEV environment as an example, we do that as follows:

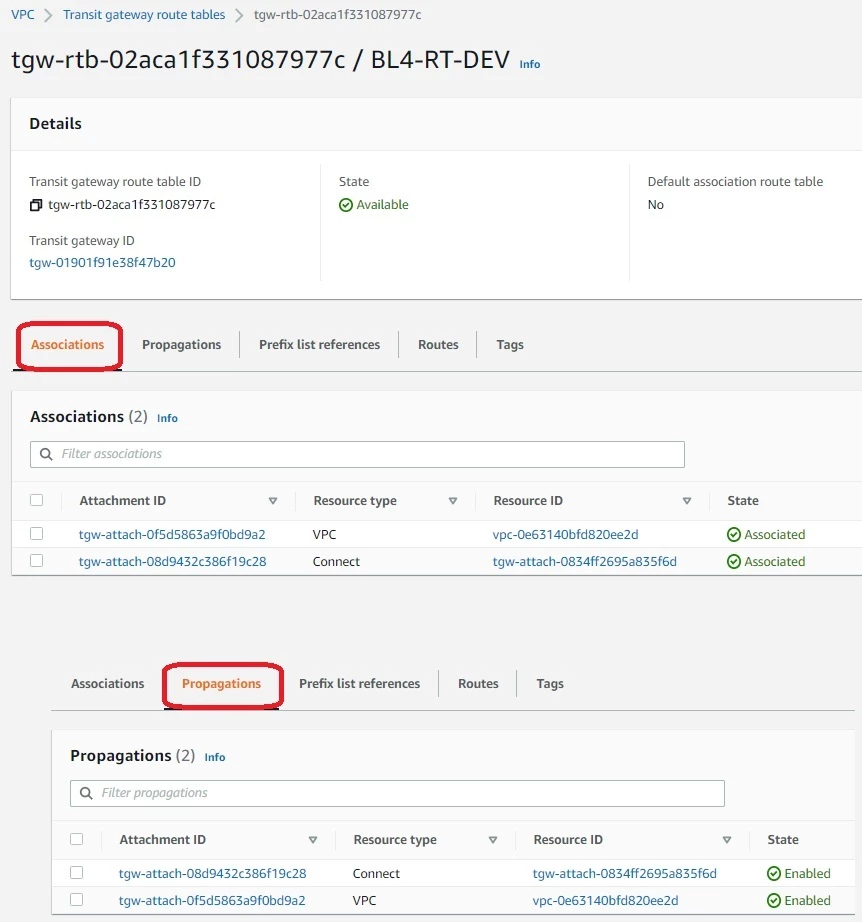
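Following the same pattern as the PROD peers in the Infrastructure as Code section, the two DEV peers could be sketched as below. The sketch assumes a Connect attachment resource named `connect_DEV` (hypothetical); the TGW addresses 10.119.100.1/10.119.100.2 and the inside CIDRs 169.254.101.0/29 and 169.254.102.0/29 are placeholders, not values taken from this setup. The TGW address has to come from the TGW CIDR block (10.119.0.0/16 here), and the inside CIDR is a /29 from 169.254.0.0/16.

```hcl
# Sketch: two DEV Connect peers (GRE endpoints), one per Aviatrix TrGW.
# TGW addresses and inside CIDRs are hypothetical placeholders.
resource "aws_ec2_transit_gateway_connect_peer" "BL4-DEV-AVX-primary" {
  peer_address                  = module.aws_transit_eu_1.transit_gateway.private_ip # primary TrGW
  transit_gateway_address       = "10.119.100.1"                                     # from the TGW CIDR block
  inside_cidr_blocks            = ["169.254.101.0/29"]                               # BGP addressing inside GRE
  bgp_asn                       = "65101"                                            # Aviatrix TrGW ASN
  transit_gateway_attachment_id = aws_ec2_transit_gateway_connect.connect_DEV.id

  tags = {
    Name = "BL4-DEV-AVX-primary"
  }
}

resource "aws_ec2_transit_gateway_connect_peer" "BL4-DEV-AVX-ha" {
  peer_address                  = module.aws_transit_eu_1.transit_gateway.ha_private_ip # HA TrGW
  transit_gateway_address       = "10.119.100.2"
  inside_cidr_blocks            = ["169.254.102.0/29"]
  bgp_asn                       = "65101"
  transit_gateway_attachment_id = aws_ec2_transit_gateway_connect.connect_DEV.id

  tags = {
    Name = "BL4-DEV-AVX-ha"
  }
}
```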

STEP 5 - AWS - route table association / propagation

We have dedicated route tables for PROD, DEV, and underlay. Now we need to make sure these route tables are populated, either statically or by propagation.

PROPAGATION

Propagation injects (installs) the CIDRs seen behind an attachment into a specific RTB.

ASSOCIATION

Association, on the other hand, binds an attachment to an RTB: traffic arriving from that attachment is looked up in that RTB. The old networking analogy would be assigning a network interface to a VRF.

The key thing to remember is that an attachment can be associated with only one RTB, but its CIDRs can be propagated into many RTBs. Taking BL4-RT-DEV as an example, we have the following:

The VPC attached there is the one where the workloads sit, not the Aviatrix underlay attachment.

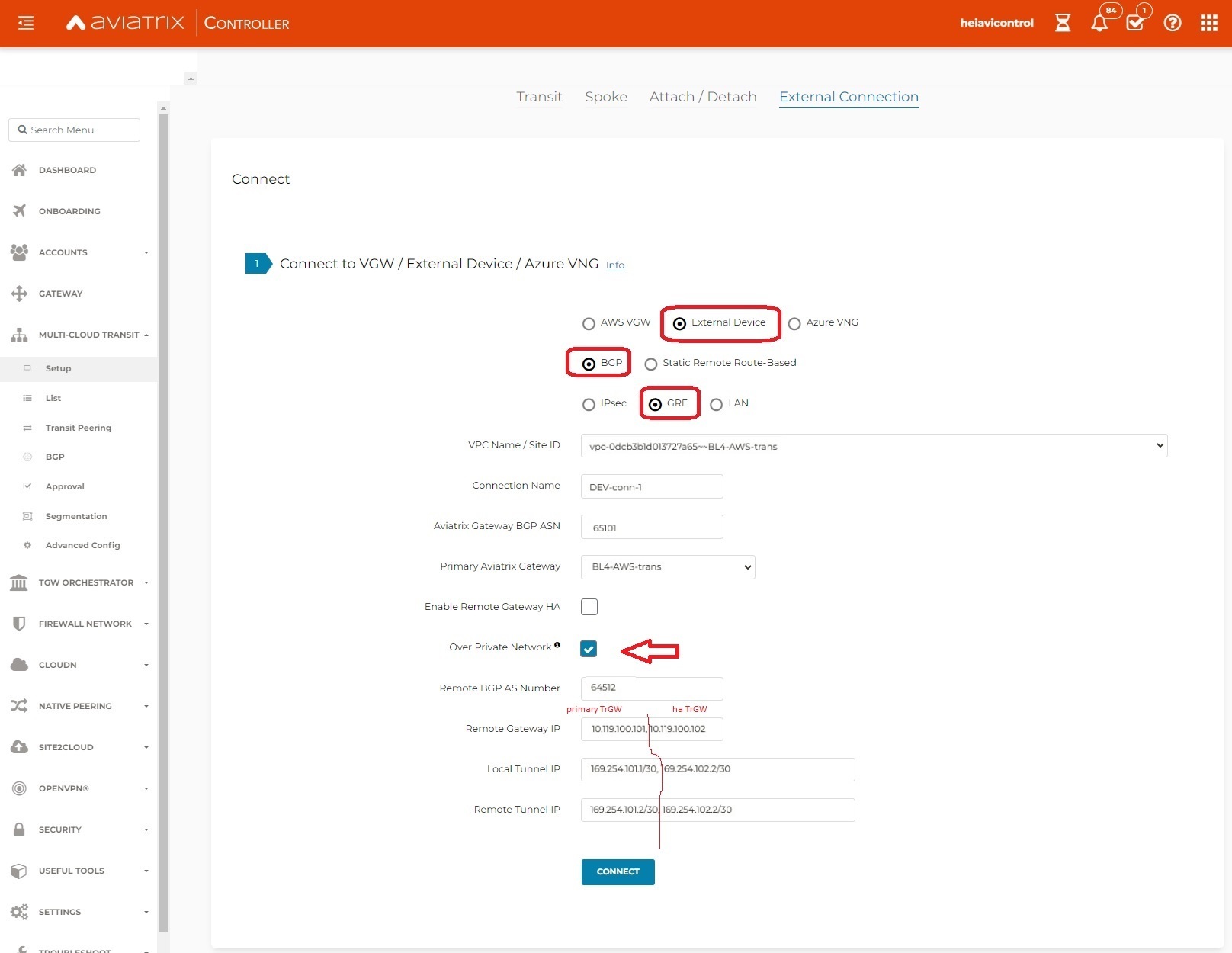

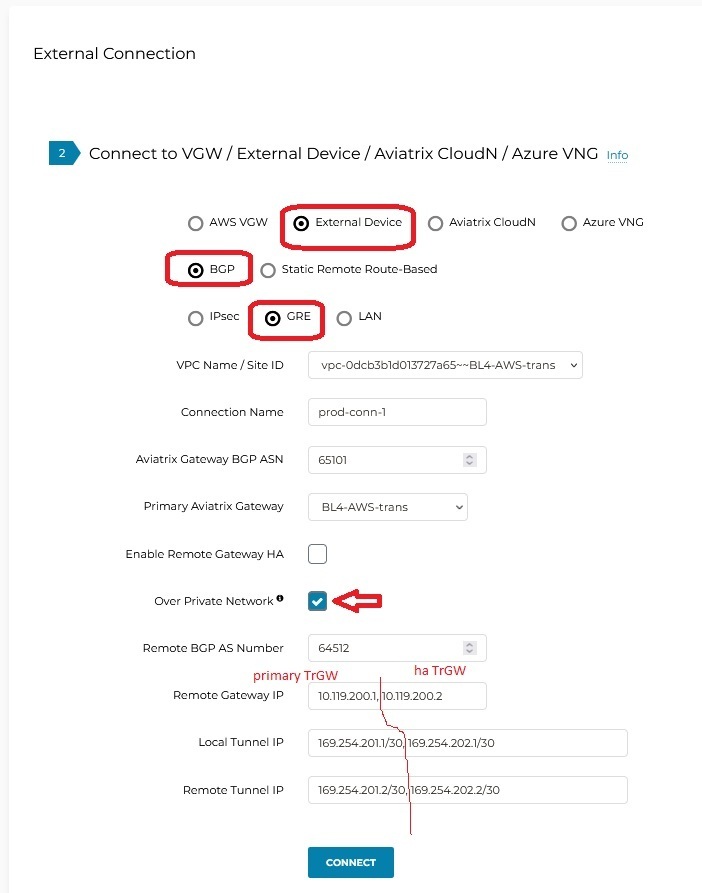
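The BL4-RT-DEV setup could be expressed in Terraform as follows, mirroring the PROD association and propagation in the Infrastructure as Code section. Both the DEV workload VPC attachment and the DEV Connect attachment are associated with, and propagated into, BL4-RT-DEV; this pairing is an assumption based on the PROD code and the description above, and the three input variables are hypothetical.

```hcl
# Sketch: populate BL4-RT-DEV. All variable names are hypothetical.
variable "dev_vpc_attachment_id" {
  type        = string
  description = "TGW VPC attachment ID of the DEV workload VPC"
}

variable "dev_connect_attachment_id" {
  type        = string
  description = "TGW Connect attachment ID for DEV (GRE to Aviatrix)"
}

variable "rt_dev_id" {
  type        = string
  description = "ID of the TGW route table BL4-RT-DEV"
}

locals {
  dev_attachments = {
    vpc     = var.dev_vpc_attachment_id
    connect = var.dev_connect_attachment_id
  }
}

# Association: traffic arriving from each attachment is looked up in BL4-RT-DEV
resource "aws_ec2_transit_gateway_route_table_association" "dev" {
  for_each                       = local.dev_attachments
  transit_gateway_attachment_id  = each.value
  transit_gateway_route_table_id = var.rt_dev_id
}

# Propagation: CIDRs behind each attachment (VPC CIDR, BGP-learned routes) land in BL4-RT-DEV
resource "aws_ec2_transit_gateway_route_table_propagation" "dev" {
  for_each                       = local.dev_attachments
  transit_gateway_attachment_id  = each.value
  transit_gateway_route_table_id = var.rt_dev_id
}
```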

STEP 6 - AVIATRIX - Create S2C connection

Let's create our connection on the Aviatrix TrGW.

Repeat the same for PROD.

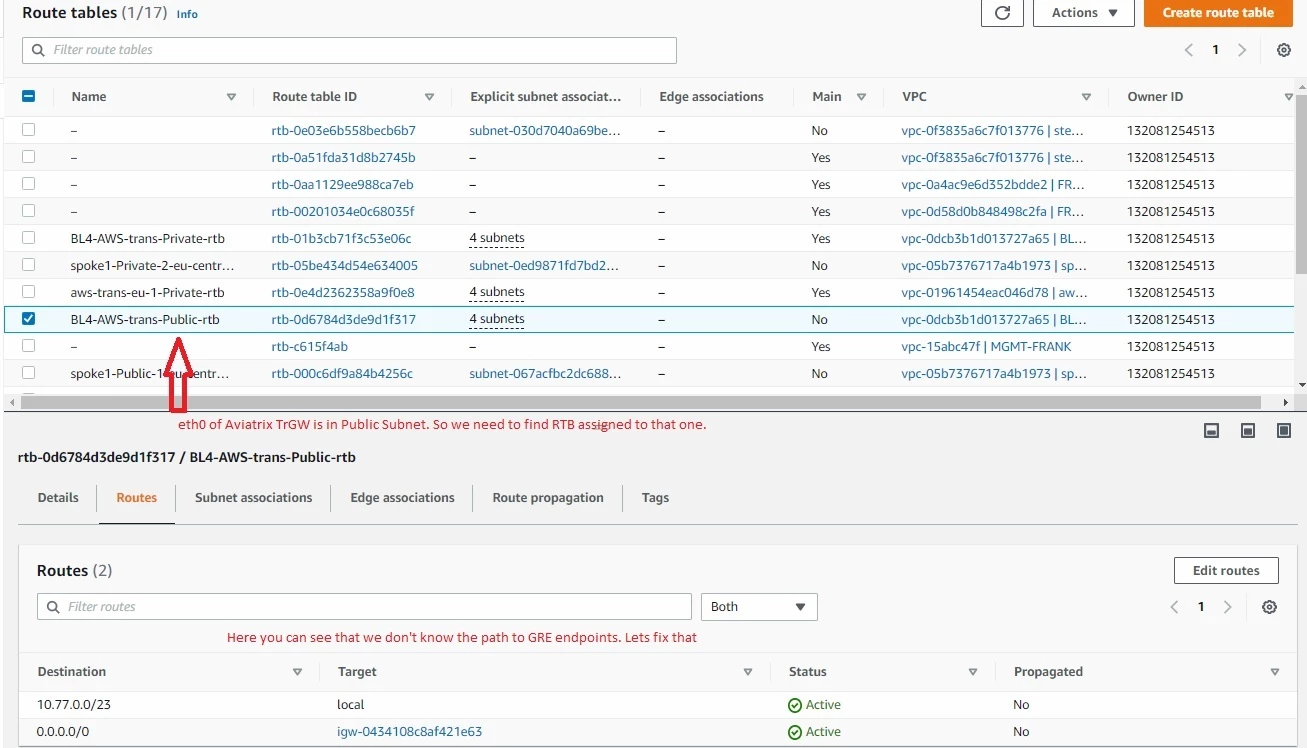

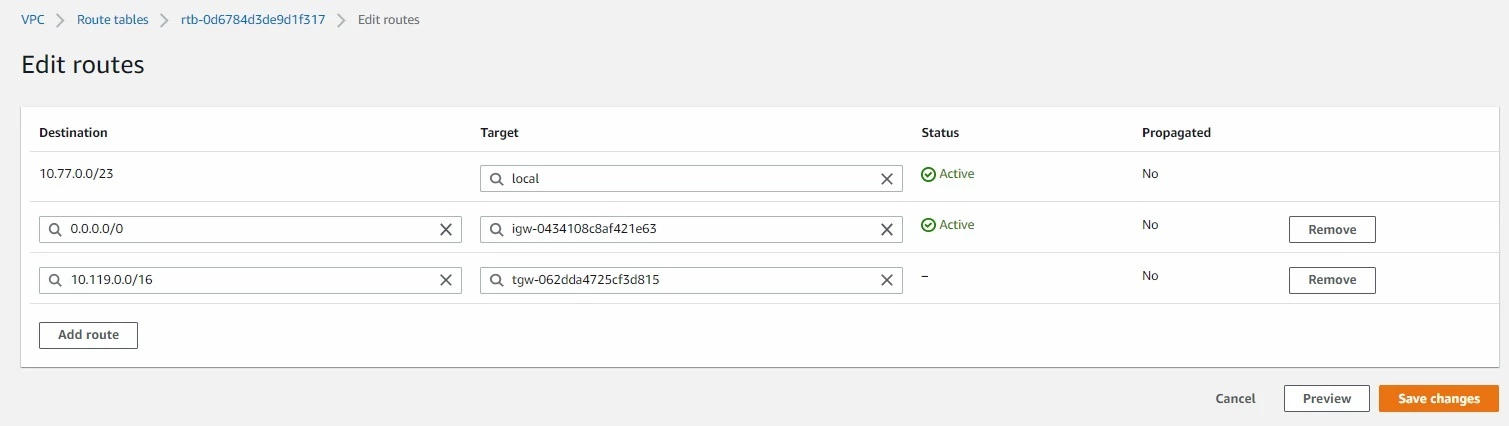
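The same connection could be expressed with the Aviatrix Terraform provider's `aviatrix_transit_external_device_conn` resource. This is a sketch for PROD only, derived from the PROD Connect peers in the Infrastructure as Code section: the /30 tunnel addresses are the first two hosts of each peer's /29 inside CIDR (the Aviatrix side takes the first address, TGW the second). The connection name is hypothetical, and the attribute names should be checked against the provider version in use.

```hcl
# Sketch: GRE + BGP connection from the Aviatrix TrGW to AWS TGW Connect (PROD).
# Connection name is hypothetical; verify attributes for your provider version.
resource "aviatrix_transit_external_device_conn" "gre_PROD" {
  vpc_id            = module.aws_transit_eu_1.transit_gateway.vpc_id
  gw_name           = module.aws_transit_eu_1.transit_gateway.gw_name
  connection_name   = "BL4-GRE-PROD"
  connection_type   = "bgp"
  tunnel_protocol   = "GRE"
  bgp_local_as_num  = "65101" # Aviatrix TrGW
  bgp_remote_as_num = "64512" # AWS TGW

  # Primary TrGW <-> Connect peer BL4-PROD-AVX-primary (169.254.201.0/29)
  remote_gateway_ip  = "10.119.200.1"
  local_tunnel_cidr  = "169.254.201.1/30"
  remote_tunnel_cidr = "169.254.201.2/30"

  # HA TrGW <-> Connect peer BL4-PROD-AVX-ha (169.254.202.0/29)
  ha_enabled                = true
  backup_remote_gateway_ip  = "10.119.200.2"
  backup_bgp_remote_as_num  = "64512"
  backup_local_tunnel_cidr  = "169.254.202.1/30"
  backup_remote_tunnel_cidr = "169.254.202.2/30"
}
```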

STEP 7 - AWS - modify RTB on Aviatrix VPC

We attached the Aviatrix VPC to TGW, but its routing still needs an adjustment. To build the GRE tunnels, we need to point 10.119.0.0/16 (the TGW CIDR block) to the TGW attachment in the Aviatrix VPC route table.

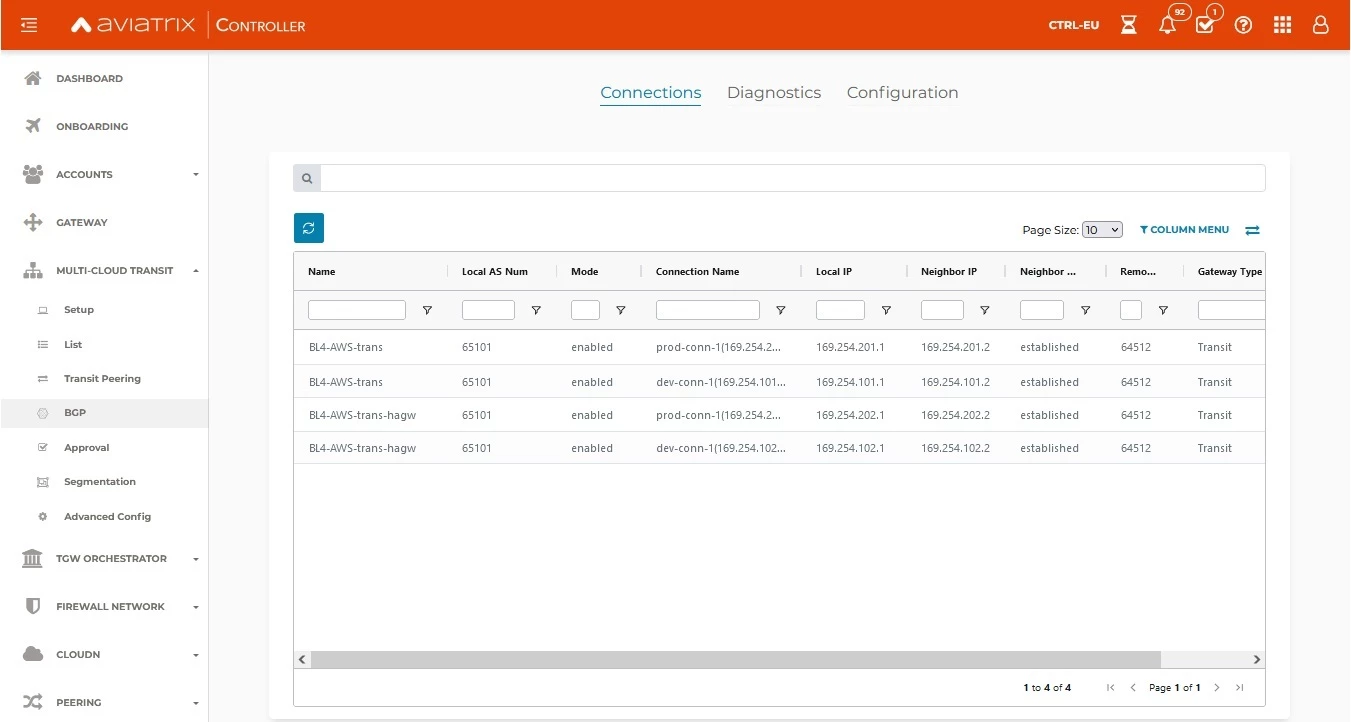
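A sketch of the same route in Terraform. `var.avx_transit_route_table_ids` is a hypothetical input listing the route tables of the Aviatrix transit subnets; the TGW and VPC attachment names match the Infrastructure as Code section. A route targeting a TGW is typically rejected until the VPC is attached to it, hence the explicit `depends_on`.

```hcl
# Sketch: route the TGW CIDR block (GRE endpoints) from the Aviatrix VPC to TGW.
# var.avx_transit_route_table_ids is a hypothetical input.
variable "avx_transit_route_table_ids" {
  type        = list(string)
  description = "Route tables used by the Aviatrix transit gateway subnets"
}

resource "aws_route" "avx_to_tgw_cidr" {
  for_each               = toset(var.avx_transit_route_table_ids)
  route_table_id         = each.value
  destination_cidr_block = "10.119.0.0/16"
  transit_gateway_id     = aws_ec2_transit_gateway.TGW.id

  # The VPC must be attached to the TGW before a route can target it
  depends_on = [aws_ec2_transit_gateway_vpc_attachment.TGW_attachment_AVIATRIX_VPC]
}
```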

As soon as routing is fixed, we should see BGP UP. One important thing to notice is that only one BGP session per Connect peer will be UP. The reason is that AWS TGW tries to build two BGP sessions over each Connect peer's GRE tunnel (using two TGW addresses from the /29 inside CIDR), while Aviatrix is pretty strict and only accepts a /30 for the LOCAL and REMOTE tunnel addresses.

We can verify that on the Aviatrix GW as well (new BGP view):

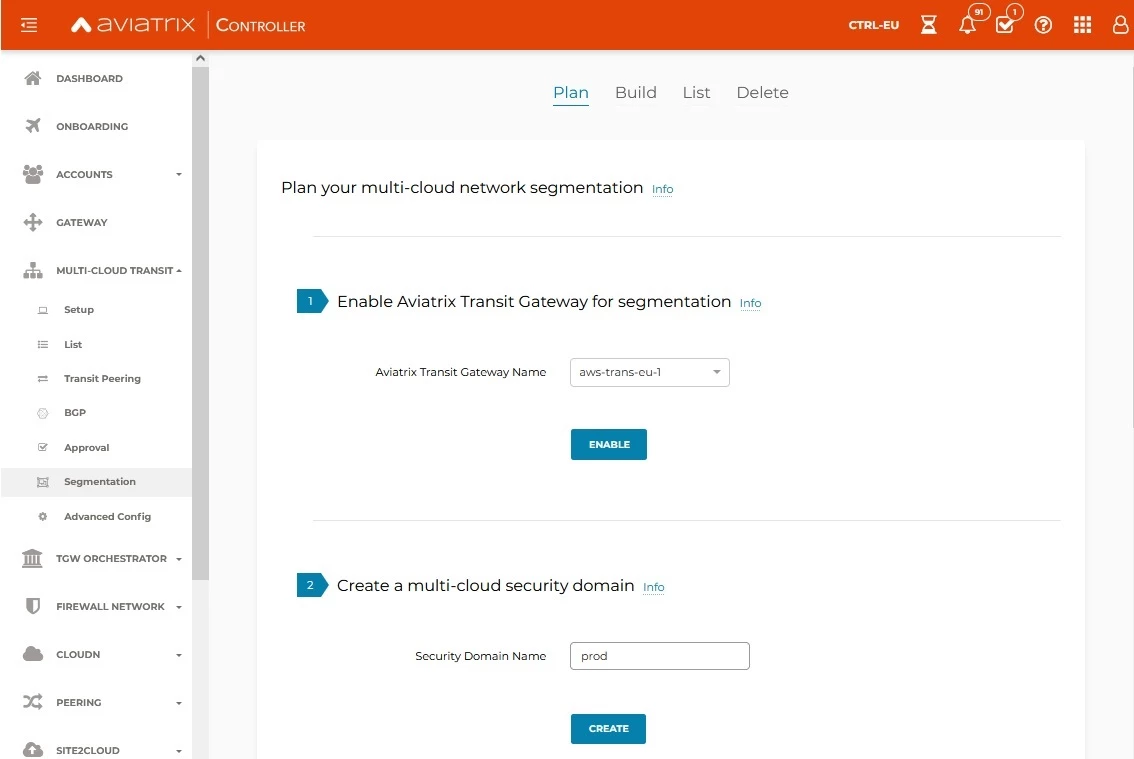

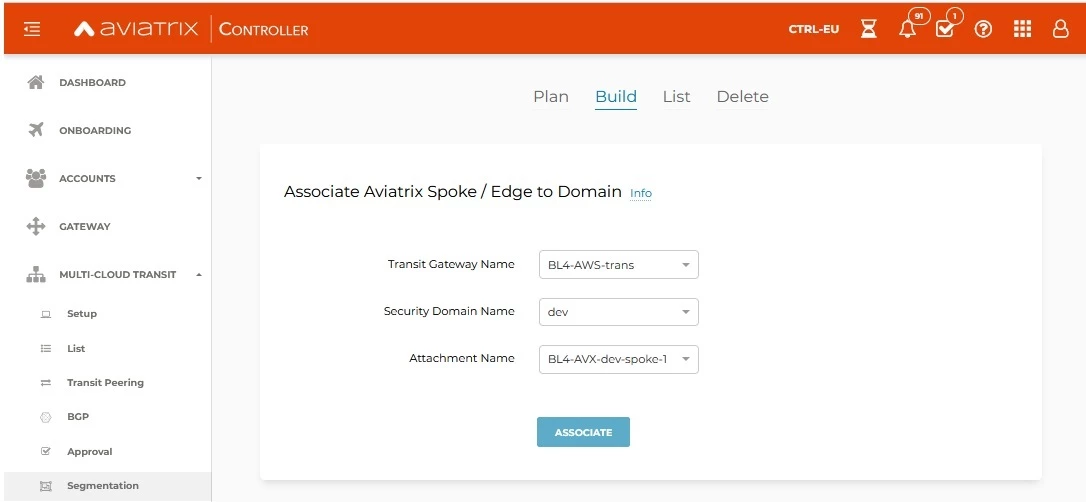

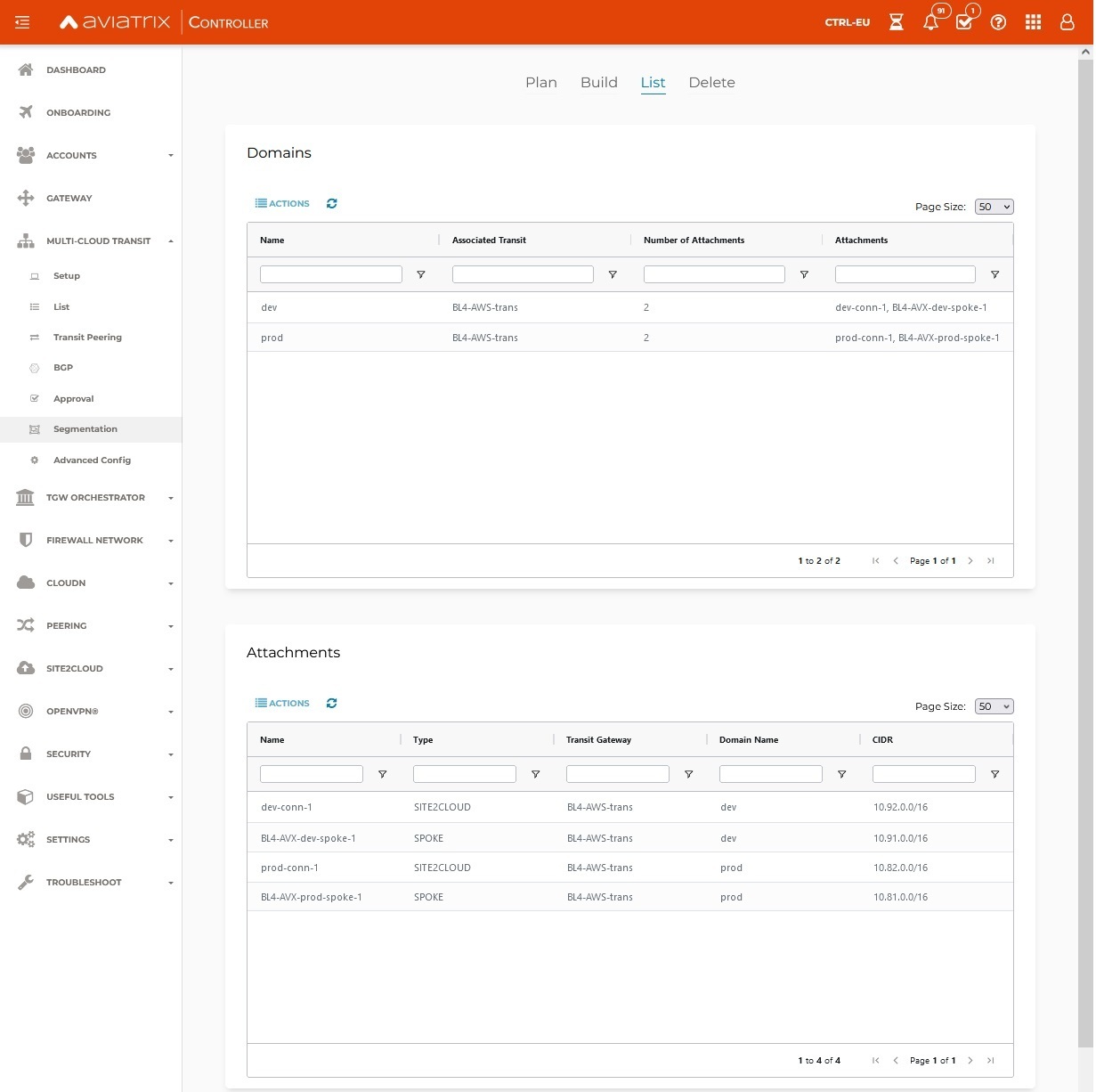
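The /29 versus /30 mismatch can be made concrete with Terraform's built-in `cidrhost()` and `cidrsubnet()` functions, for example in `terraform console`. The sketch uses the inside CIDR of the PROD primary peer; per AWS, the first address of the /29 belongs to the peer (Aviatrix) and the second and third to TGW, one per BGP session. The local and output names are hypothetical.

```hcl
# Sketch: derive the Aviatrix /30 tunnel addresses from a Connect /29.
locals {
  inside_cidr = "169.254.201.0/29" # BL4-PROD-AVX-primary

  # AWS convention: .1 = peer (Aviatrix), .2 and .3 = TGW (two BGP sessions)
  avx_local_tunnel_cidr  = "${cidrhost(local.inside_cidr, 1)}/30" # "169.254.201.1/30"
  avx_remote_tunnel_cidr = "${cidrhost(local.inside_cidr, 2)}/30" # "169.254.201.2/30"
  tgw_second_bgp_ip      = cidrhost(local.inside_cidr, 3)         # "169.254.201.3"

  # The /30 Aviatrix accepts covers .0-.3; .3 is its broadcast address,
  # so the TGW's second BGP session has no usable neighbor on the Aviatrix side.
  avx_tunnel_net = cidrsubnet(local.inside_cidr, 1, 0) # "169.254.201.0/30"
}

output "avx_tunnel_addresses" {
  value = {
    local  = local.avx_local_tunnel_cidr
    remote = local.avx_remote_tunnel_cidr
    net    = local.avx_tunnel_net
    unused = local.tgw_second_bgp_ip
  }
}
```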

STEP 8 - AVIATRIX - Network Domains

We covered that part in a previous post, but here is a quick recap:

- enable segmentation on the TrGW
- create network domains
- associate spokes and connections with the appropriate domain

The list of all attachments should look like this:

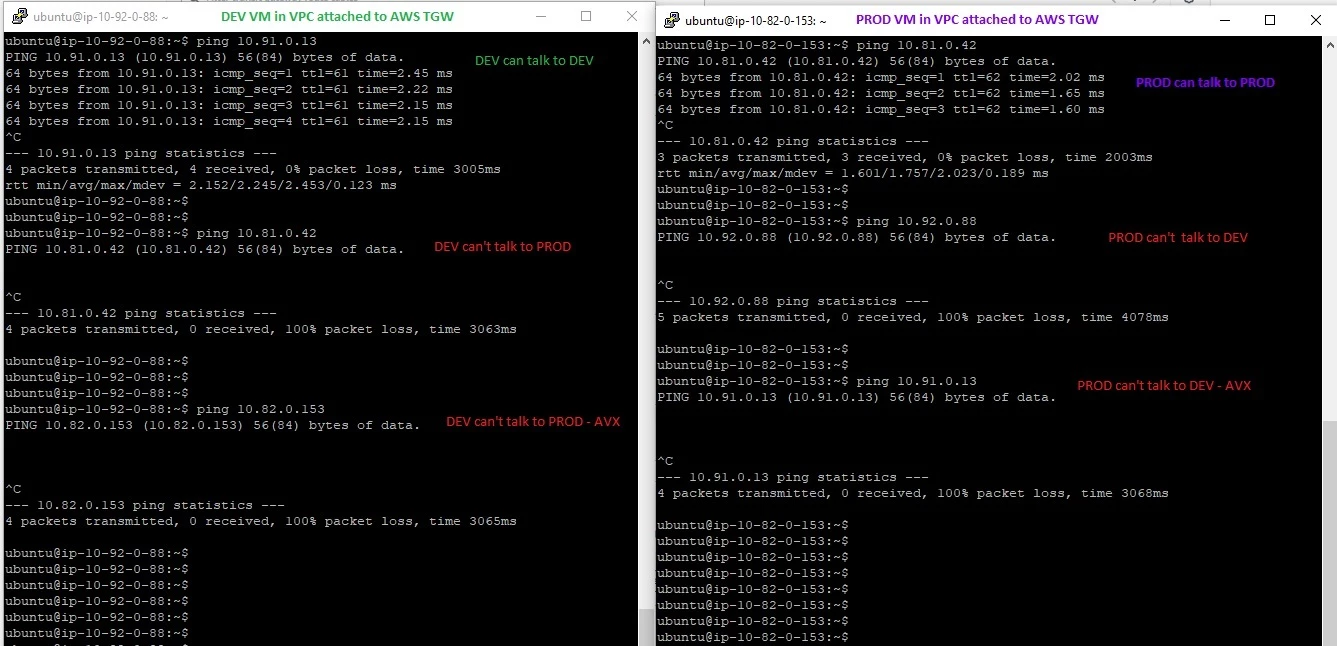
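For a Terraform-managed setup, the recap could look roughly like the sketch below (PROD only). Assumptions: segmentation is already enabled on the TrGW (the `enable_segmentation` argument of `aviatrix_transit_gateway`), `BL4-GRE-PROD` is a hypothetical S2C connection name, and `var.prod_spoke_gw_name` is a hypothetical input. Depending on the Aviatrix provider version, the association resource may also expect `transit_gateway_name`.

```hcl
# Sketch: PROD network domain with the GRE connection and a spoke attached.
# Connection name and variable are hypothetical.
variable "prod_spoke_gw_name" {
  type = string
}

resource "aviatrix_segmentation_network_domain" "PROD" {
  domain_name = "PROD"
}

resource "aviatrix_segmentation_network_domain_association" "prod_gre" {
  network_domain_name = aviatrix_segmentation_network_domain.PROD.domain_name
  attachment_name     = "BL4-GRE-PROD" # S2C connection towards AWS TGW
}

resource "aviatrix_segmentation_network_domain_association" "prod_spoke" {
  network_domain_name = aviatrix_segmentation_network_domain.PROD.domain_name
  attachment_name     = var.prod_spoke_gw_name
}
```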

TESTING CONNECTIVITY

High Performance Encryption

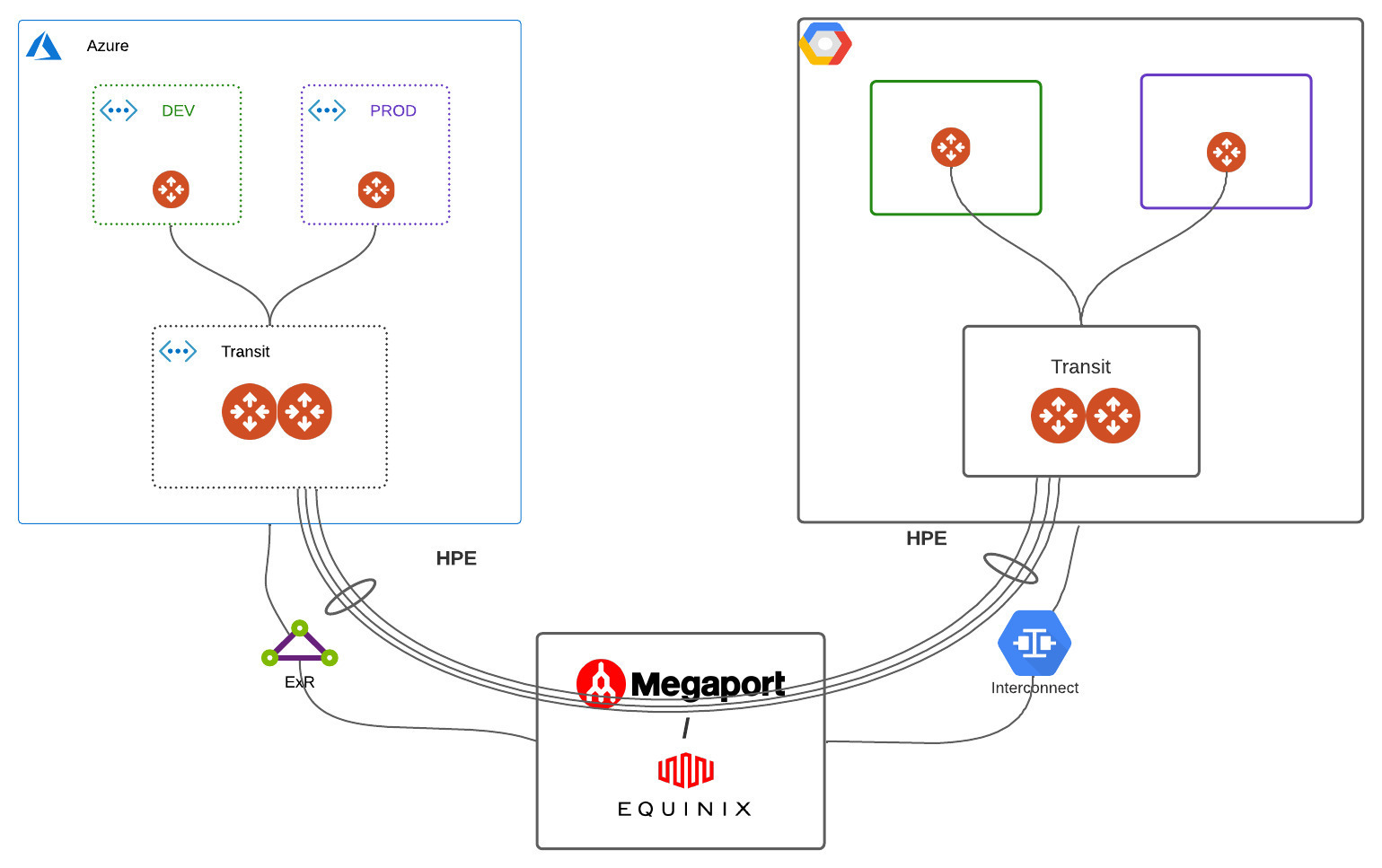

So far we have focused on the AWS side. Going fully multicloud is not a problem at all, but what about our throughput requirement there? Our primary reason for using GRE in AWS was high bandwidth, and building individual IPsec tunnels between clouds would not give us that. Luckily, between AWS and Azure we can leverage Aviatrix HPE (High Performance Encryption).

Why not GCP?

The idea of HPE is to build many IPsec tunnels that act as a single connection. To do that, we need multiple public IPs assigned to the VM (Aviatrix GW), and GCP doesn't allow that.

But if we use private IPs over ExpressRoute and Interconnect, we can achieve that.
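In Terraform, HPE between an AWS and an Azure transit can be requested on the transit peering. The sketch below is assumption-heavy: both transits are assumed to be deployed with HPE (`insane_mode`) enabled, `var.azure_transit_gw_name` and the tunnel count are hypothetical, and the `enable_insane_mode_encryption_over_internet` and `tunnel_count` arguments should be verified against the `aviatrix_transit_gateway_peering` documentation for your provider version.

```hcl
# Sketch: AWS <-> Azure transit peering with HPE over the internet.
# Both transits are assumed to have insane_mode (HPE) enabled.
variable "azure_transit_gw_name" {
  type = string # hypothetical
}

resource "aviatrix_transit_gateway_peering" "aws_azure" {
  transit_gateway_name1                       = module.aws_transit_eu_1.transit_gateway.gw_name
  transit_gateway_name2                       = var.azure_transit_gw_name
  enable_insane_mode_encryption_over_internet = true
  tunnel_count                                = 4 # hypothetical; HPE spreads traffic over several IPsec tunnels
}
```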

Infrastructure as Code:

On the AWS side, the Connect attachment itself and all of its associations, propagations, and peers are defined below (example based on PROD only):

```hcl
# ---------- PROD ----------

# Connect attachment (GRE) on top of the Aviatrix VPC attachment
resource "aws_ec2_transit_gateway_connect" "connect_PROD" {
  transport_attachment_id                         = aws_ec2_transit_gateway_vpc_attachment.TGW_attachment_AVIATRIX_VPC.id
  transit_gateway_id                              = aws_ec2_transit_gateway.TGW.id
  transit_gateway_default_route_table_association = false

  tags = {
    Name = "BL4-GRE-PROD"
  }
}

# Associate the Connect attachment with BL4-RT-PROD
resource "aws_ec2_transit_gateway_route_table_association" "RT_ass_connect_to_RT-PROD" {
  transit_gateway_attachment_id  = aws_ec2_transit_gateway_connect.connect_PROD.id
  transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.TGW_RT_PROD.id
}

# Propagate routes learned over the Connect attachment (BGP) into BL4-RT-PROD
resource "aws_ec2_transit_gateway_route_table_propagation" "propagation_Connect_to_RT-PROD" {
  transit_gateway_attachment_id  = aws_ec2_transit_gateway_connect.connect_PROD.id
  transit_gateway_route_table_id = aws_ec2_transit_gateway_route_table.TGW_RT_PROD.id
}

# Connect peer (GRE endpoint) for the primary Aviatrix TrGW
resource "aws_ec2_transit_gateway_connect_peer" "BL4-PROD-AVX-primary" {
  peer_address                  = module.aws_transit_eu_1.transit_gateway.private_ip
  transit_gateway_address       = "10.119.200.1"       # from the TGW CIDR block
  inside_cidr_blocks            = ["169.254.201.0/29"] # BGP addressing inside the GRE tunnel
  bgp_asn                       = "65101"              # Aviatrix TrGW ASN
  transit_gateway_attachment_id = aws_ec2_transit_gateway_connect.connect_PROD.id

  tags = {
    Name = "BL4-PROD-AVX-primary"
  }
}

# Connect peer (GRE endpoint) for the HA Aviatrix TrGW
resource "aws_ec2_transit_gateway_connect_peer" "BL4-PROD-AVX-ha" {
  peer_address                  = module.aws_transit_eu_1.transit_gateway.ha_private_ip
  transit_gateway_address       = "10.119.200.2"
  inside_cidr_blocks            = ["169.254.202.0/29"]
  bgp_asn                       = "65101"
  transit_gateway_attachment_id = aws_ec2_transit_gateway_connect.connect_PROD.id

  tags = {
    Name = "BL4-PROD-AVX-ha"
  }
}
```