What is AWS Fargate for ECS?

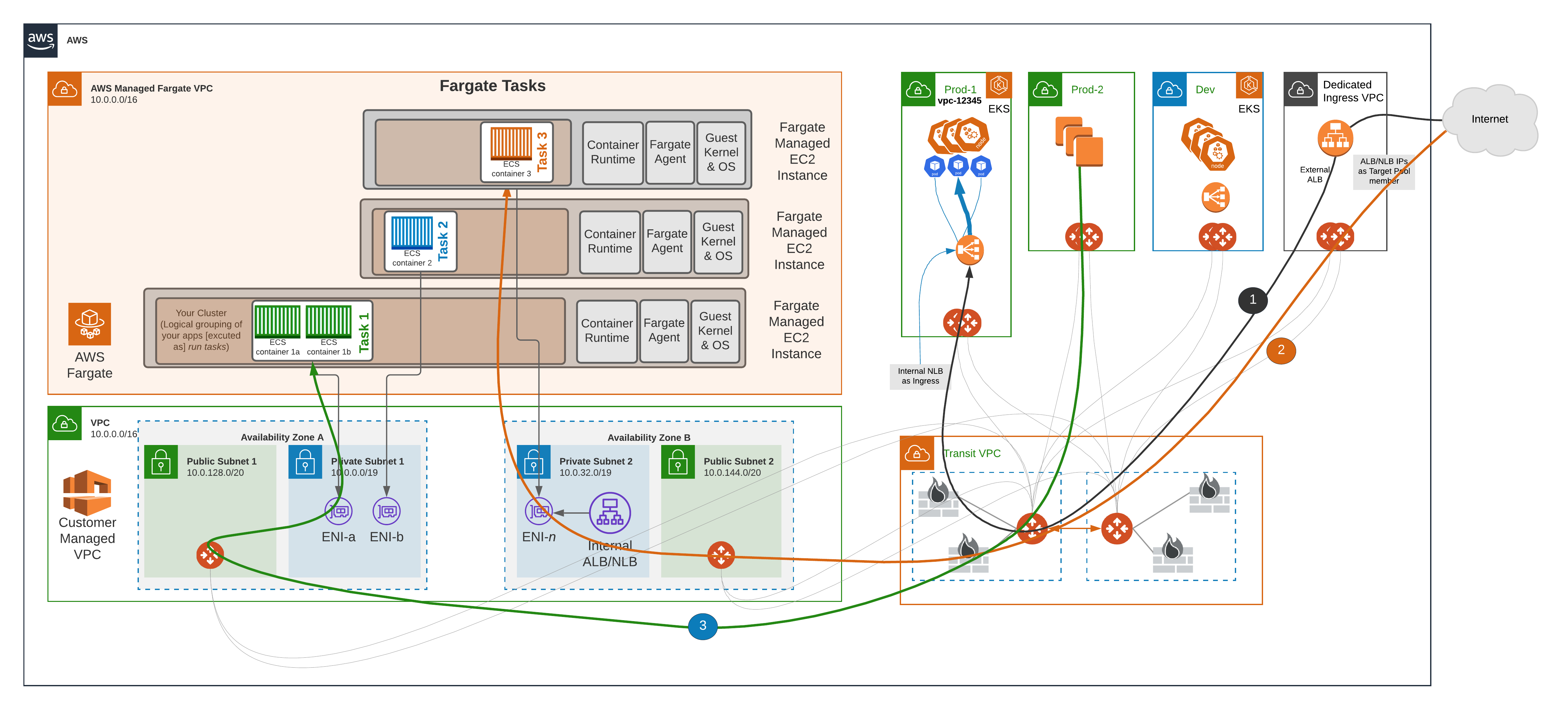

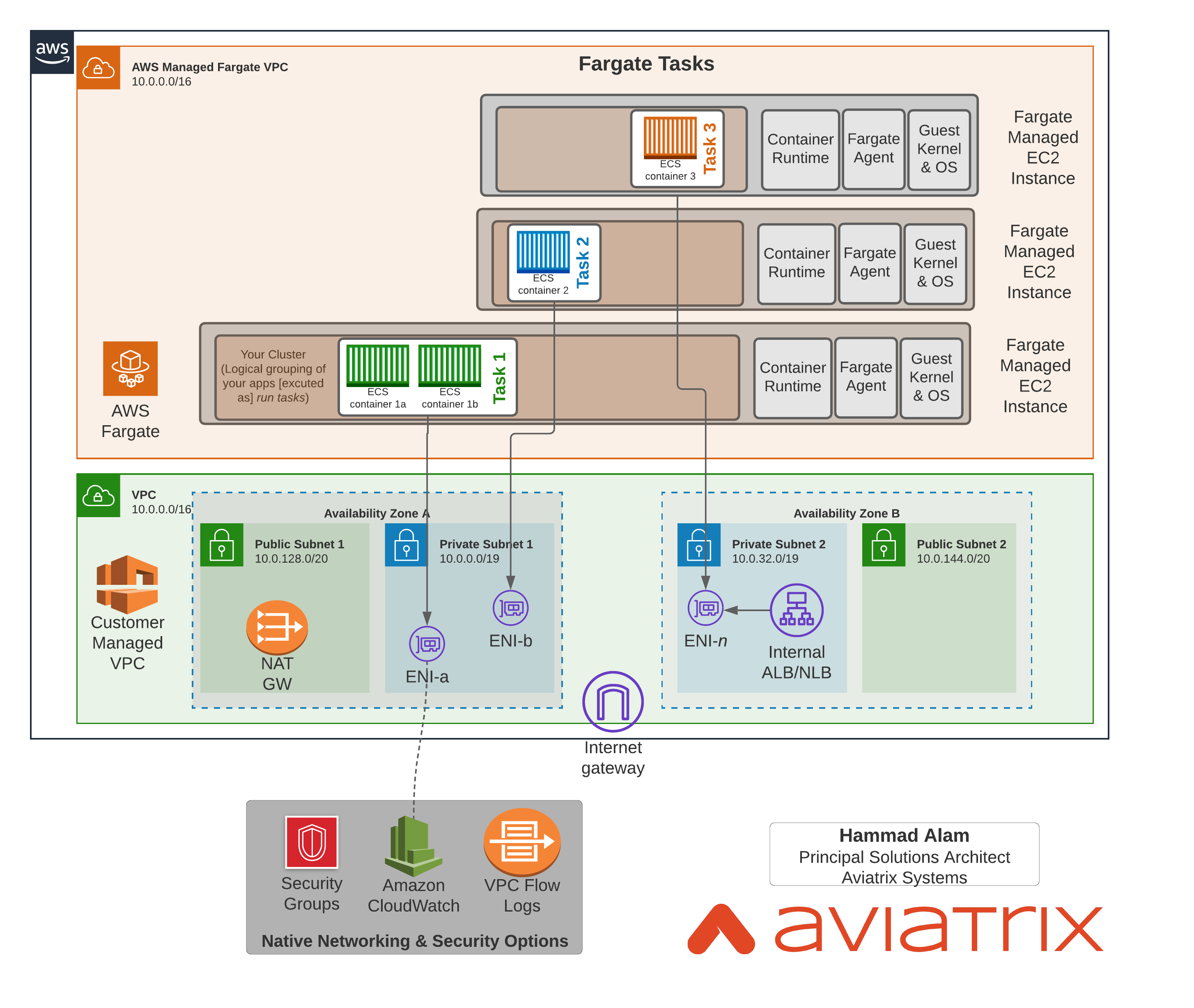

AWS Fargate is a serverless container platform where you run containers on compute instances managed by AWS. This relieves customers from provisioning, configuring, or scaling VMs to run containers. The compute instances are Firecracker-powered microVMs that run in AWS-managed VPCs, which are invisible to customers.

NOTE: There is another way of consuming AWS Fargate, with Amazon EKS, which we are not discussing in this blog. However, if that is of interest, please do reach out.

How does AWS Fargate work?

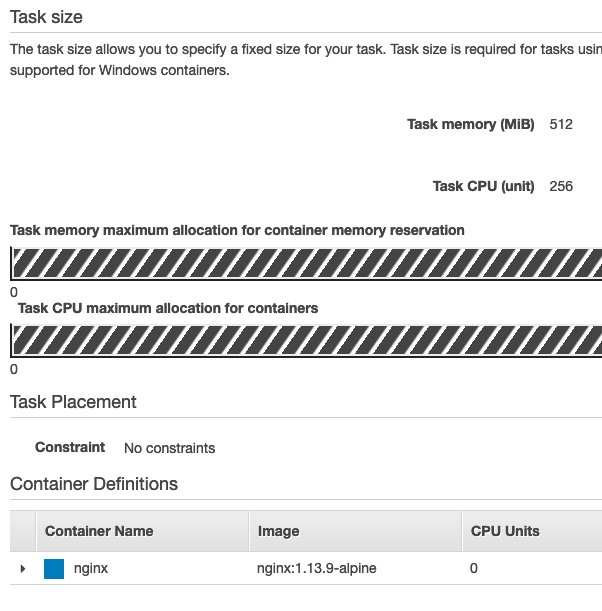

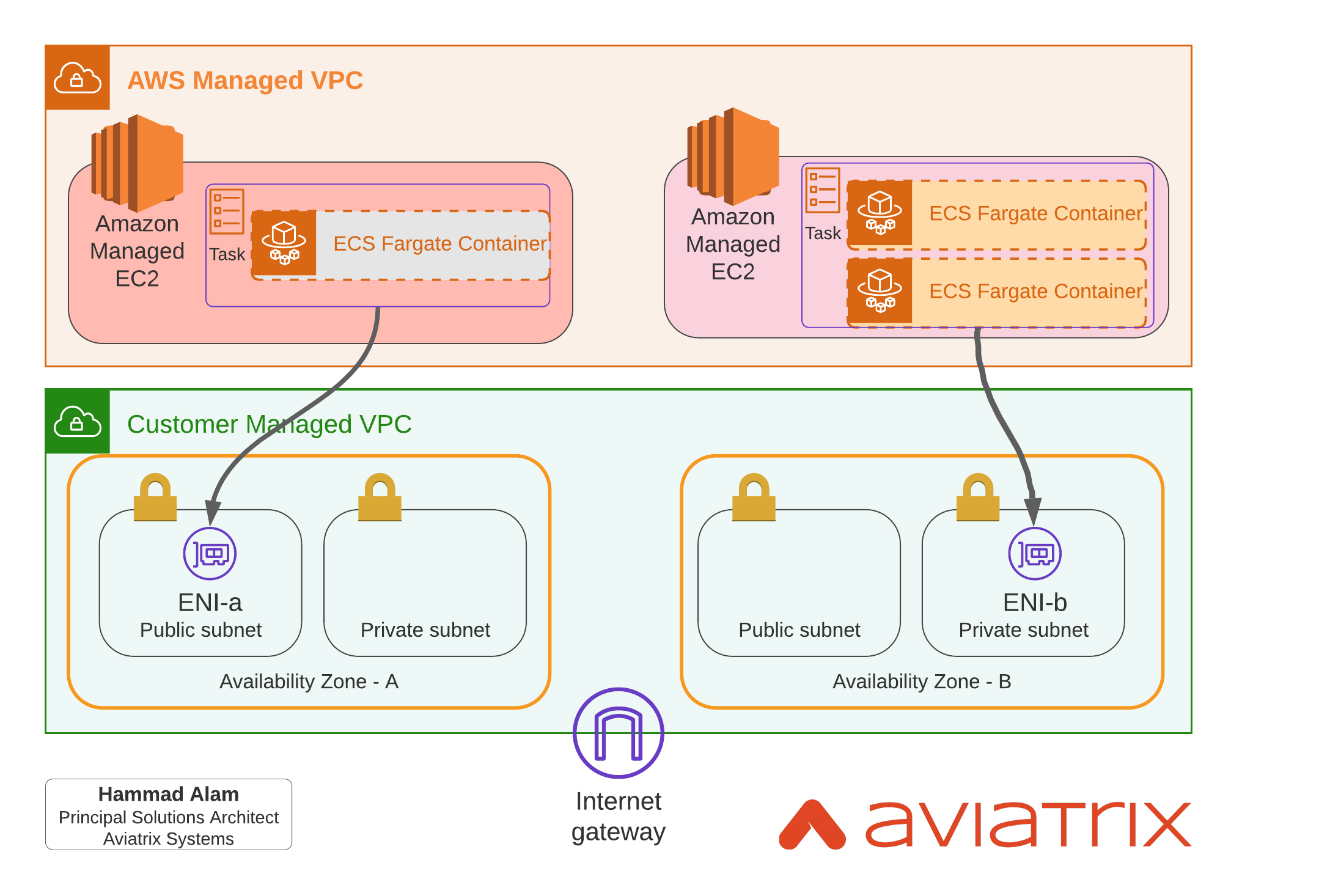

To deploy containers using AWS Fargate, the customer needs to define a Task that specifies its CPU and memory needs. Behind each Task, AWS provisions a microVM (the equivalent of an EC2 instance) in an AWS-managed VPC.
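As a minimal sketch of what such a Task definition can look like, the snippet below builds the request body as a plain Python dictionary. The family name `demo-web` and the image `nginx:latest` are placeholders chosen for illustration, and the CPU and memory values are just one small combination; this is not taken from the original setup.

```python
import json


def fargate_task_definition(family, image, cpu=256, memory_mib=512):
    """Build a minimal ECS task definition dict for the Fargate launch type.

    All argument values are illustrative placeholders.
    """
    return {
        "family": family,
        # Fargate tasks must declare the FARGATE compatibility
        # and use the awsvpc network mode (one ENI per task).
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",
        # Task-level CPU units and memory (MiB) are passed as strings.
        "cpu": str(cpu),
        "memory": str(memory_mib),
        "containerDefinitions": [
            {"name": family, "image": image, "essential": True}
        ],
    }


task_def = fargate_task_definition("demo-web", "nginx:latest")
print(json.dumps(task_def, indent=2))
```

Assuming boto3 is installed and credentials are configured, a dictionary like this could be passed to `boto3.client("ecs").register_task_definition(**task_def)`. A real deployment would typically also need an execution role, for example to pull from a private registry or ship logs.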

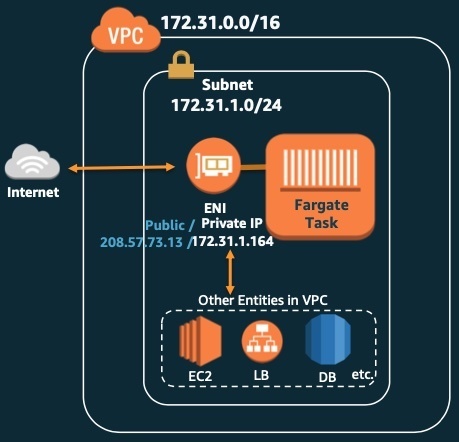

An ENI is created in the customer VPC for each Task, and each Task may run one or more containers. You can optionally attach a pre-existing load balancer to front the containers.

What happens from Networking perspective when you run a Task?

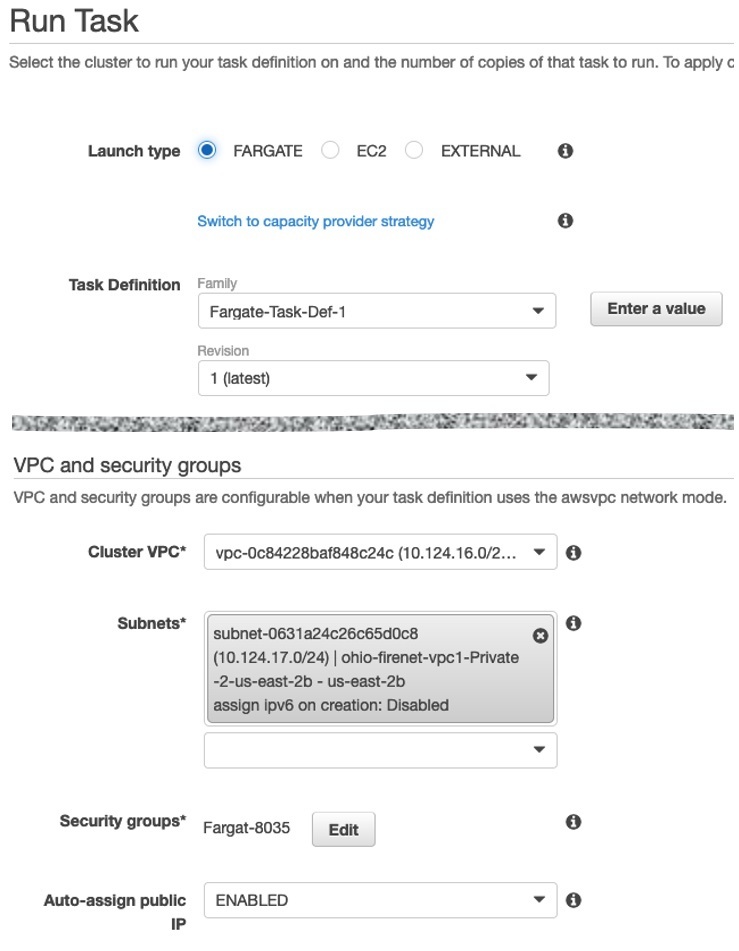

When running the Task, you choose a VPC, a subnet, and a Security Group, and decide whether to assign a public IP. Based on these choices, the Task's ENI is attached to the chosen subnet and given a private IP address and, optionally, a public one.

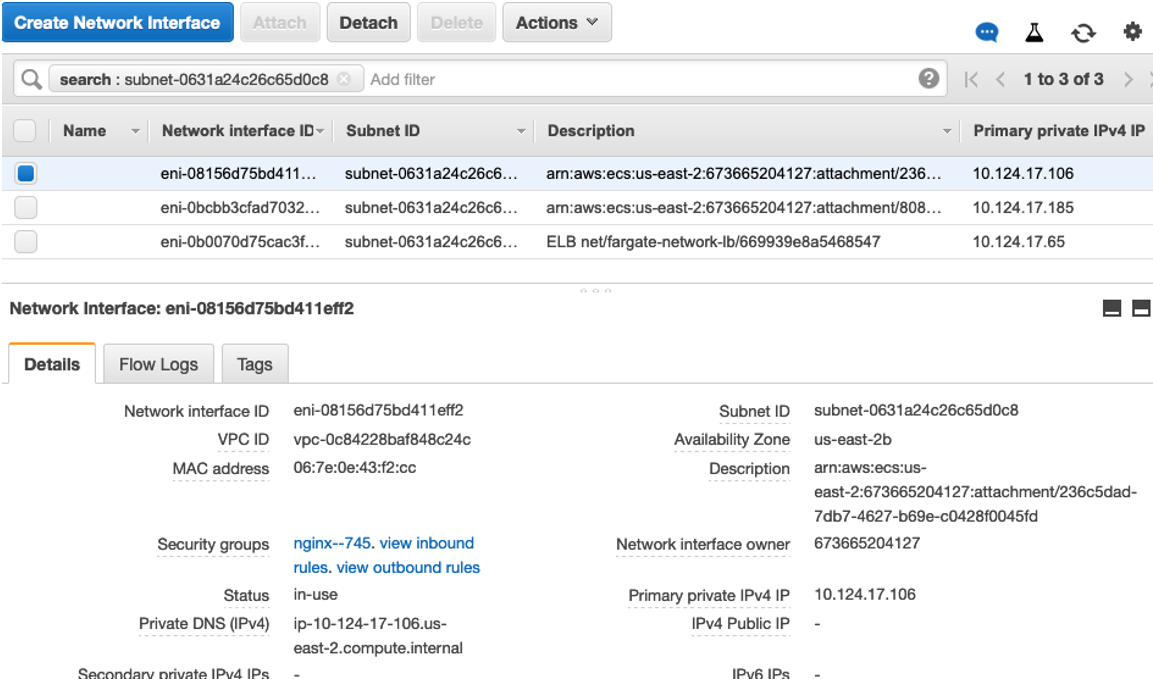

The above setting will result in the ENI being attached to the specified subnet.
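The same choices can be expressed programmatically. The sketch below builds the `networkConfiguration` structure that the ECS `RunTask` API accepts for `awsvpc` tasks. The cluster name, task definition name, subnet ID, and security group ID are hypothetical placeholders, not values from this environment.

```python
def fargate_network_configuration(subnet_ids, security_group_ids,
                                  assign_public_ip=False):
    """Build the awsvpc network configuration for a Fargate task."""
    return {
        "awsvpcConfiguration": {
            # Subnet(s) where the Task ENI will be placed.
            "subnets": list(subnet_ids),
            # Security Groups attached to the Task ENI.
            "securityGroups": list(security_group_ids),
            # The API expects the strings ENABLED or DISABLED.
            "assignPublicIp": "ENABLED" if assign_public_ip else "DISABLED",
        }
    }


# Hypothetical identifiers, for illustration only.
run_task_kwargs = {
    "cluster": "demo-cluster",
    "launchType": "FARGATE",
    "taskDefinition": "demo-web",
    "networkConfiguration": fargate_network_configuration(
        ["subnet-0123456789abcdef0"],
        ["sg-0123456789abcdef0"],
        assign_public_ip=False,
    ),
}
print(run_task_kwargs["networkConfiguration"])
```

With boto3 available, these keyword arguments could be handed to `boto3.client("ecs").run_task(**run_task_kwargs)`. Leaving the public IP disabled means the Task relies on whatever egress path the subnet's route table provides.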

The Task ENI will show up in your Network Interfaces like any other EC2 ENI, with a private (and optionally public) IP from the subnet range.

What Networking and Security features for AWS Fargate are provided by AWS?

AWS Fargate simplifies the deployment and compute/storage management of containers; however, the networking and security of those containers are solely the customer's to manage. The networking and security functionality that AWS provides natively includes:

- Automatically attaching the Task ENI to the appropriate subnet

- Security Groups attached to the ENI of the Container or LB (customer managed)

- Ability to enable Amazon CloudWatch Log integration

- Ability to enable VPC Flow Logs

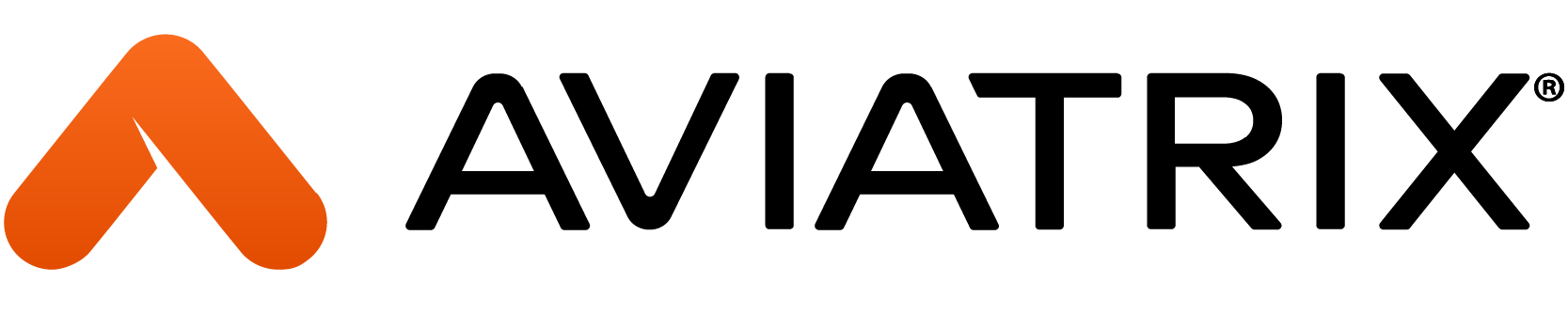

The diagram shows how Fargate networking works. As you can see, each Task has a dedicated EC2 instance in an AWS-managed VPC, with an ENI placed in the customer subnet. From that point on, it is the customer's responsibility to manage connectivity from this ENI to wherever it needs to go.

What are some of the networking and security needs that are customer’s responsibility?

Some examples of the networking and security needs that arise from the moment the Task is run:

- The container image needs to be pulled, which requires network access to the internet or to a container registry

- Access to AWS EC2, CloudWatch and other services

- Egress access to resources on the internet

- Ingress access to the application from the internet

- Apps living in other VPCs, regions, on-prem, or other clouds need access to this container

- Security, compliance, and audit requirements

- Day 2 operations

All of the above are areas where the Aviatrix Platform can help. Aviatrix helps customers manage VPC routing and control ingress, egress, east-west, and north-south traffic patterns. In addition, Aviatrix encrypts the data plane, simplifies security service insertion, and brings unprecedented visibility to customers.

The following picture demonstrates how the Aviatrix Platform provides simplified networking and security for AWS Fargate containers without making any change to the architecture.

Note: The above diagram shows many more use cases and traffic patterns than are covered in this blog.

If you were to highlight two common pain points for AWS Fargate admins, what would they be?

The above diagram covers several use cases. Let’s look at some of the most common questions I hear from customers.

Problem#1: Controlling Egress Traffic from AWS Fargate

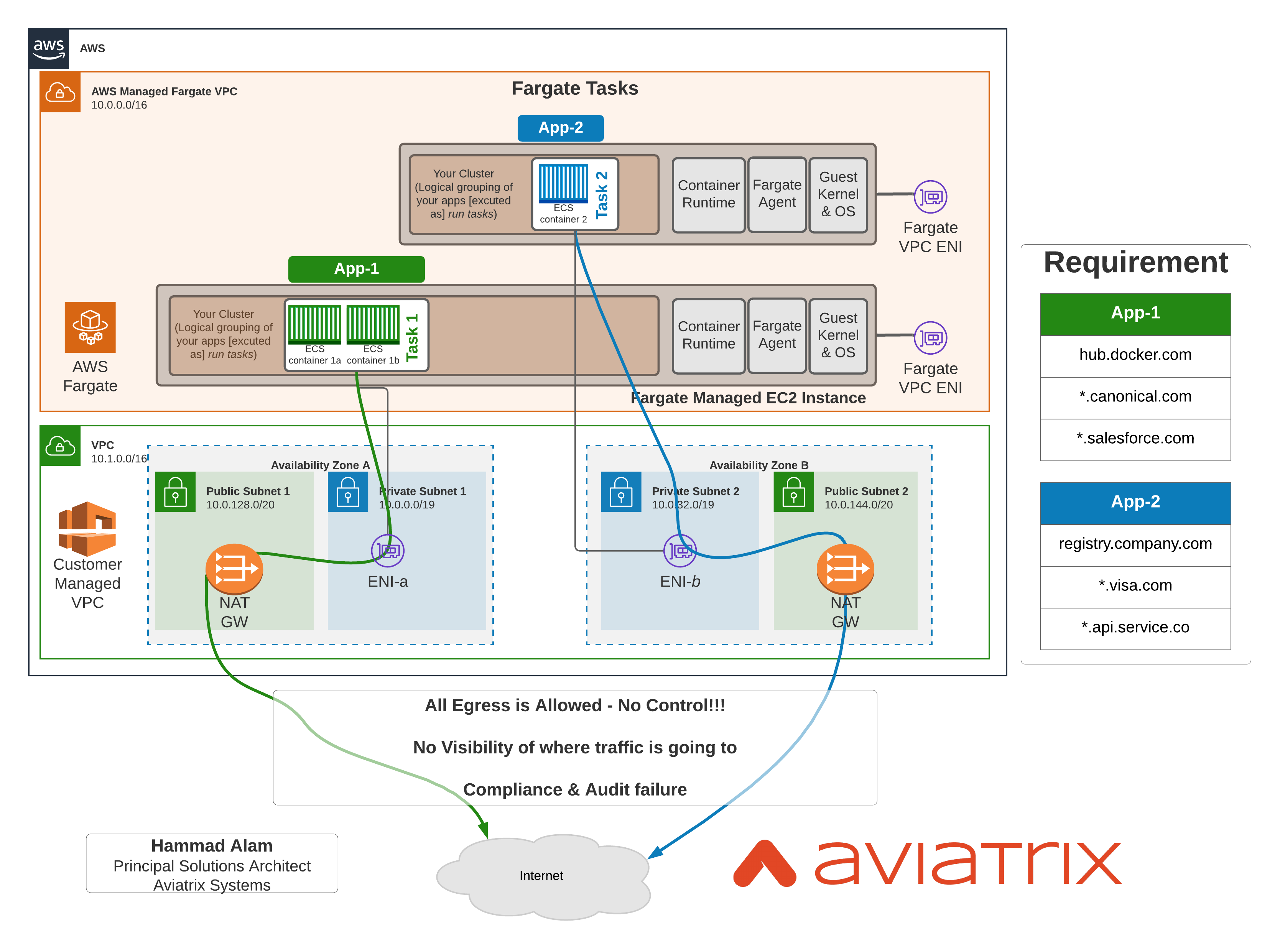

Basic Fargate networking patterns show container traffic egressing natively via an AWS NAT Gateway to the internet. The NAT Gateway does provide internet connectivity, but it offers no control over where the traffic goes. For example, it cannot answer questions such as:

- Are tasks only going to approved container registries?

- Is any data exfiltration happening, where a container may be leaking data to unapproved destinations?

- Is traffic to S3 limited to approved buckets?

There are many ways Aviatrix can simplify this problem. For example:

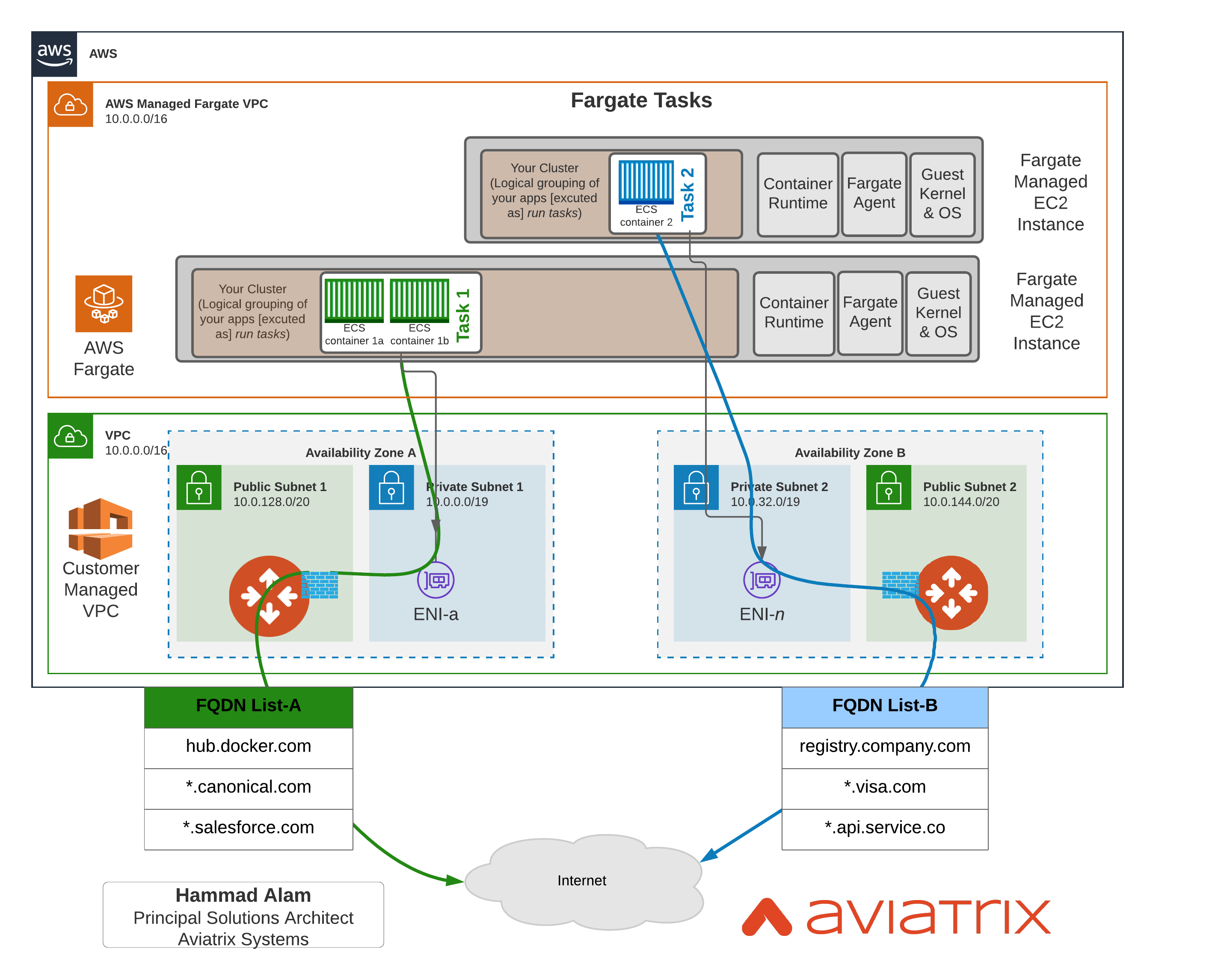

- Decentralized FQDN Egress Gateway in each VPC

- Centralized FQDN Egress Gateways for several VPCs

- Insert NextGen Firewall in a centralized transit design

- S3 Gateways to limit traffic to approved buckets

Let’s look at option 1 in the following diagram, where Aviatrix Egress FQDN Gateways limit traffic to approved destinations only.
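To make the idea of FQDN-based egress filtering concrete, here is a small conceptual sketch of an allowlist check. It only illustrates the matching logic and is not how the Aviatrix gateway is implemented; the allowlist entries and the function name `is_allowed` are assumptions made for this example.

```python
from fnmatch import fnmatch

# Hypothetical allowlist; "*" matches any run of characters, including dots.
ALLOWED_FQDNS = [
    "registry-1.docker.io",
    "auth.docker.io",
    "*.amazonaws.com",
]


def is_allowed(hostname, allowlist=ALLOWED_FQDNS):
    """Return True if the destination hostname matches an allowlist pattern."""
    # Normalize case and drop a trailing root dot before matching.
    host = hostname.lower().rstrip(".")
    return any(fnmatch(host, pattern.lower()) for pattern in allowlist)


for destination in ("registry-1.docker.io",
                    "logs.us-east-1.amazonaws.com",
                    "example.com"):
    verdict = "allow" if is_allowed(destination) else "deny"
    print(f"{destination}: {verdict}")
```

A broad pattern such as `*.amazonaws.com` is convenient but permits every AWS endpoint under that domain, so tighter patterns are usually preferable where the set of destinations is known.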

Problem#2: Day2 Ops: Visibility, Troubleshooting, Control

Because containers are ephemeral and traffic flows between the VPC and other VPCs, the internet, on-prem, other regions, and so on, troubleshooting becomes a nightmare: operational teams struggle to figure out what the application is talking to and what is breaking.

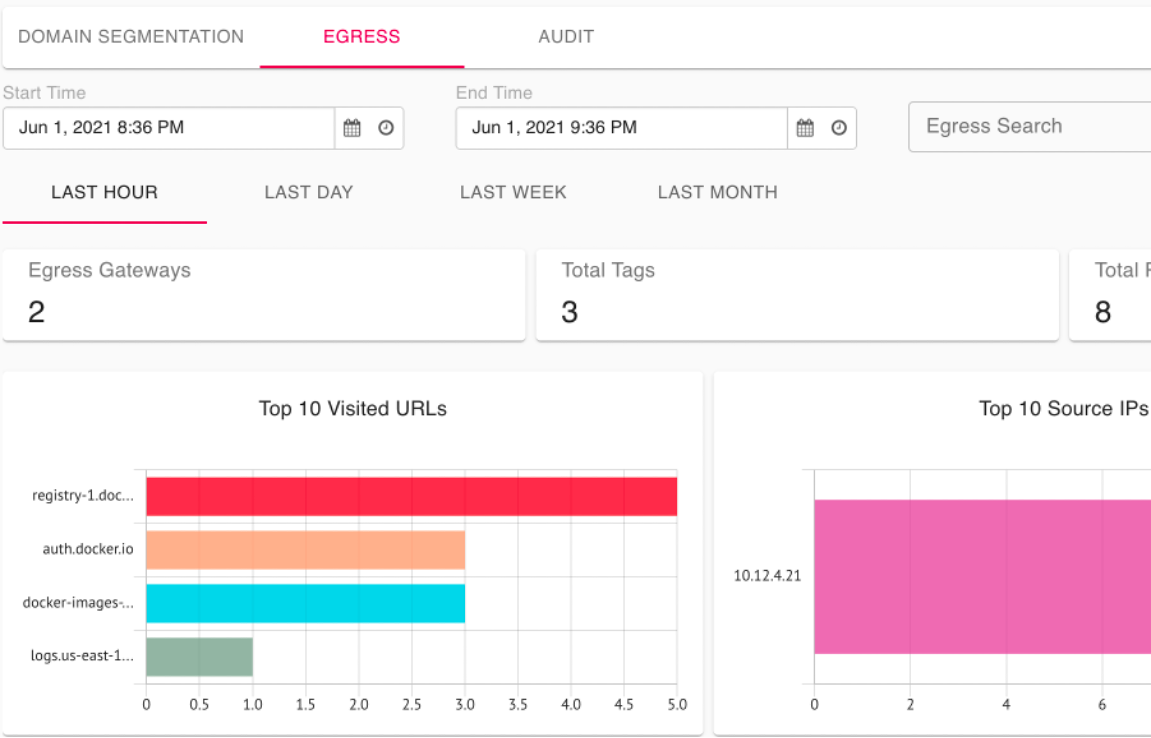

For example, if the container is egressing, where is it going? Aviatrix Egress FQDN can discover all the traffic, and you can enforce it with a click as well. Here are some example log outputs:

Discovered 4 visited sites from VPC of nv-transit-cen-egress-spoke-agw:

registry-1.docker.io,tcp,443

docker-images-prod.s3.amazonaws.com,tcp,443

auth.docker.io,tcp,443

logs.us-east-1.amazonaws.com,tcp,443

=========================================================

Search results on Gateway nv-transit-cen-egress-spoke-agw

=========================================================

2021-06-02T01:34:58.901794+00:00 GW-nv-transit-cen-egress-spoke-agw-34.192.189.130 avx-nfq: AviatrixFQDNRule2[CRIT]nfq_ssl_handle_client_hello() L#291 Gateway=nv-transit-cen-egress-spoke-agw S_IP=10.12.4.21 D_IP=54.152.28.6 hostname=registry-1.docker.io state=NO_MATCH

2021-06-02T01:34:58.944827+00:00 GW-nv-transit-cen-egress-spoke-agw-34.192.189.130 avx-nfq: AviatrixFQDNRule0[CRIT]nfq_ssl_handle_client_hello() L#291 Gateway=nv-transit-cen-egress-spoke-agw S_IP=10.12.4.21 D_IP=35.175.91.243 hostname=auth.docker.io state=NO_MATCH

2021-06-02T01:34:59.241346+00:00 GW-nv-transit-cen-egress-spoke-agw-34.192.189.130 avx-nfq: AviatrixFQDNRule1[CRIT]nfq_ssl_handle_client_hello() L#291 Gateway=nv-transit-cen-egress-spoke-agw S_IP=10.12.4.21 D_IP=52.46.154.122 hostname=logs.us-east-1.amazonaws.com state=NO_MATCH

2021-06-02T01:34:59.472281+00:00 GW-nv-transit-cen-egress-spoke-agw-34.192.189.130 avx-nfq: AviatrixFQDNRule3[CRIT]nfq_ssl_handle_client_hello() L#291 Gateway=nv-transit-cen-egress-spoke-agw S_IP=10.12.4.21 D_IP=52.216.132.43 hostname=docker-images-prod.s3.amazonaws.com state=NO_MATCH

Aviatrix CoPilot can show this in a UI.
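For ad hoc analysis outside a UI, log lines in the format shown above can be parsed with a short script. The sketch below assumes the `S_IP=`, `D_IP=`, `hostname=`, and `state=` key/value layout seen in these samples; the regular expression and the function name `parse_fqdn_log` are illustrative and not part of any Aviatrix tooling.

```python
import re

# Matches the key=value fields seen in the sample gateway log lines.
LOG_FIELDS = re.compile(
    r"S_IP=(?P<src>\S+)\s+D_IP=(?P<dst>\S+)\s+"
    r"hostname=(?P<hostname>\S+)\s+state=(?P<state>\w+)"
)


def parse_fqdn_log(line):
    """Extract source IP, destination IP, hostname, and state from a log line.

    Returns a dict, or None when the line does not contain the fields.
    """
    match = LOG_FIELDS.search(line)
    return match.groupdict() if match else None


sample = (
    "2021-06-02T01:34:58.901794+00:00 "
    "GW-nv-transit-cen-egress-spoke-agw-34.192.189.130 avx-nfq: "
    "AviatrixFQDNRule2[CRIT]nfq_ssl_handle_client_hello() L#291 "
    "Gateway=nv-transit-cen-egress-spoke-agw S_IP=10.12.4.21 "
    "D_IP=54.152.28.6 hostname=registry-1.docker.io state=NO_MATCH"
)

record = parse_fqdn_log(sample)
print(record)
```

Records parsed this way could then be grouped by `hostname` or `state` to see which destinations a Task is reaching before an allowlist is enforced.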

There are several other visibility aspects provided by Aviatrix such as:

- Complete Netflow data

- Historical trending and abnormalities

- Recording network changes

- Ability to run ping, traceroute, tracepath (MTU issues), telnet etc.

- Ability to take packet captures

Summary:

AWS Fargate is a serverless service for containers, but the networking, security, regulatory, and network operational responsibilities are the same as for traditional EC2 instances. The Aviatrix platform offers a variety of solutions in this realm, some of which were discussed above. For additional questions or discussions, please feel free to reach out to the author or info@aviatrix.com.

References:

One important dimension that was not discussed here is securing ingress traffic. James Devine has an excellent blog post talking about “Patterns for Centralized EKS Ingress” which is equally applicable to AWS Fargate.

For detailed designs on the Amazon EKS service, please refer to the whitepaper: Cloud Network Architectures for Kubernetes Workloads.