Security in the cloud is just as important as it is on-premises, if not more so. There is a delicate balance between putting appropriate guard rails in place and giving DevOps teams the autonomy they need to get their work done efficiently. This is compounded by newer deployment frameworks like Kubernetes (K8s), which can prove complicated even when using a managed K8s service like AWS EKS. The great news is that it’s not an either-or decision between moving quickly and operating securely. It is possible to achieve both with Aviatrix!

If you think about a common on-premises architecture, you almost certainly have firewalls (often many of them in large organizations). These traditional firewalls still have a place in the cloud and can be seamlessly implemented with the Aviatrix FireNet solution. We can leverage next-generation firewalls in addition to taking advantage of cloud-native security services.

In this post, I’ll discuss common architectures we see our customers use to deploy secured Kubernetes workloads and dig into the caveats and gotchas.

Why centralize ingress?

In a typical on-premises world, provisioning a new service is often a lengthy process. A DevOps team is rarely the same team that deploys infrastructure or writes firewall rules. Cloud changes this, as it is possible for teams to have more autonomy over their AWS environment. However, with great power comes great responsibility. It’s important to put guard rails in place so that you can move fast and still do so securely. It is a delicate balance, though: overly restrictive security policies can end up hamstringing developers and stifling innovation.

Centralizing ingress can be a great way to separate out the roles of DevOps teams deploying their K8s clusters while still ensuring networking and security teams can put in place requisite controls. This pattern gives centralized control over all the traffic flowing into a service. Service insertion becomes simplified and it’s still possible to integrate with cloud-native services. There are also economies of scale to centralizing a set of services to use a shared security stack.

Single Load Balancer Architecture

By default, if you expose services on EKS, it will use a Classic Load Balancer (CLB). This is an older generation of load balancer, but you can also choose a Network Load Balancer (NLB), which is a newer-generation layer-4 load balancer. Neither is particularly useful for an ingress design. It’s also not ideal from a security and operations perspective to have the EKS service deploy internet-facing load balancers.

This is where the AWS Load Balancer Controller (formerly called the AWS ALB Ingress Controller) comes in handy. It allows more sophisticated and granular control over load balancing and allows the use of layer-7 Application Load Balancers (ALBs). There is an option in the manifest file to specify “aws-vpc-id”, which determines which VPC is used to create resources. This option still places responsibility for, and control of, deploying the load balancer with EKS.

A better design to separate out responsibilities is to use TargetGroupBindings. This is a new feature within the AWS Load Balancer Controller where the EKS service maintains a load balancer target group, but not the actual load balancer. It allows the load balancer to be configured and managed outside of EKS. This works today within an EKS VPC, with support for ELBs outside the EKS VPC coming in the future (there is a patch to make it work).
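To make the TargetGroupBinding idea concrete, here is a minimal sketch that builds such a manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The `elbv2.k8s.aws/v1beta1` API group and the `serviceRef`, `targetGroupARN`, and `targetType` fields come from the controller's custom resource; the resource name, service name, port, and ARN are hypothetical placeholders, so check them against the controller version you run.

```python
import json


def target_group_binding(name, service_name, service_port, target_group_arn,
                         target_type="ip"):
    """Build a TargetGroupBinding manifest as a plain dict."""
    return {
        "apiVersion": "elbv2.k8s.aws/v1beta1",
        "kind": "TargetGroupBinding",
        "metadata": {"name": name},
        "spec": {
            # The Kubernetes Service whose endpoints are kept registered.
            "serviceRef": {"name": service_name, "port": service_port},
            # A target group created and owned outside of EKS.
            "targetGroupARN": target_group_arn,
            "targetType": target_type,
        },
    }


# Hypothetical values for illustration only.
manifest = target_group_binding(
    name="web-tgb",
    service_name="web",
    service_port=80,
    target_group_arn=(
        "arn:aws:elasticloadbalancing:us-east-1:111122223333:"
        "targetgroup/web/0123456789abcdef"
    ),
)

print(json.dumps(manifest, indent=2))
```

The load balancer and target group themselves would be created by the networking or security team; the cluster only references the target group by ARN.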

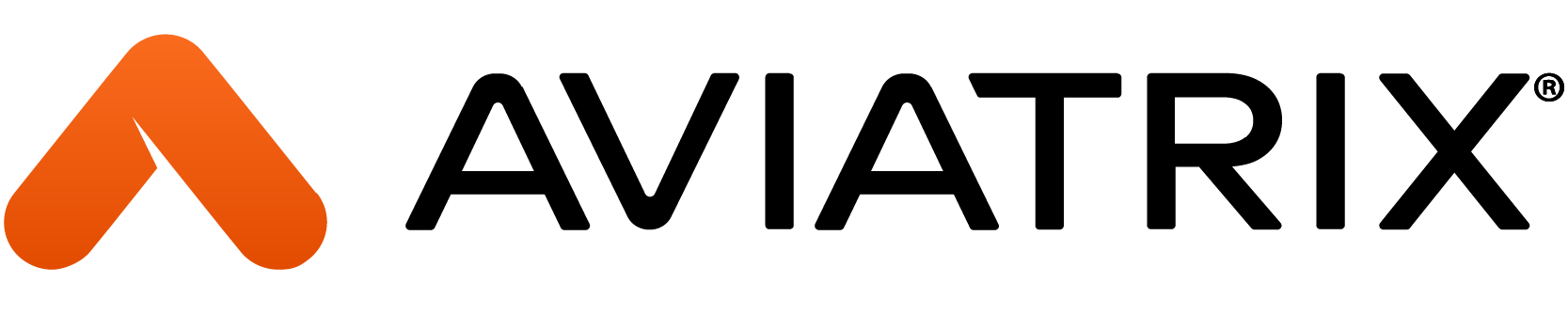

In both options the architecture looks the same, with a load balancer running in a VPC outside of the one that is hosting the EKS worker nodes and pods. This is shown in the accompanying diagram. Traffic enters the load balancer and can then be directed to FireNet for nextgen firewall insertion before being routed to the services running on EKS.

Multiple Load Balancer Architecture (ELB Sandwich)

Another option for ingress is to separate out load balancing responsibilities. This allows DevOps teams to completely control their own destiny within their VPC. Networking and security controls can then be put into place to ensure no services are exposed publicly.

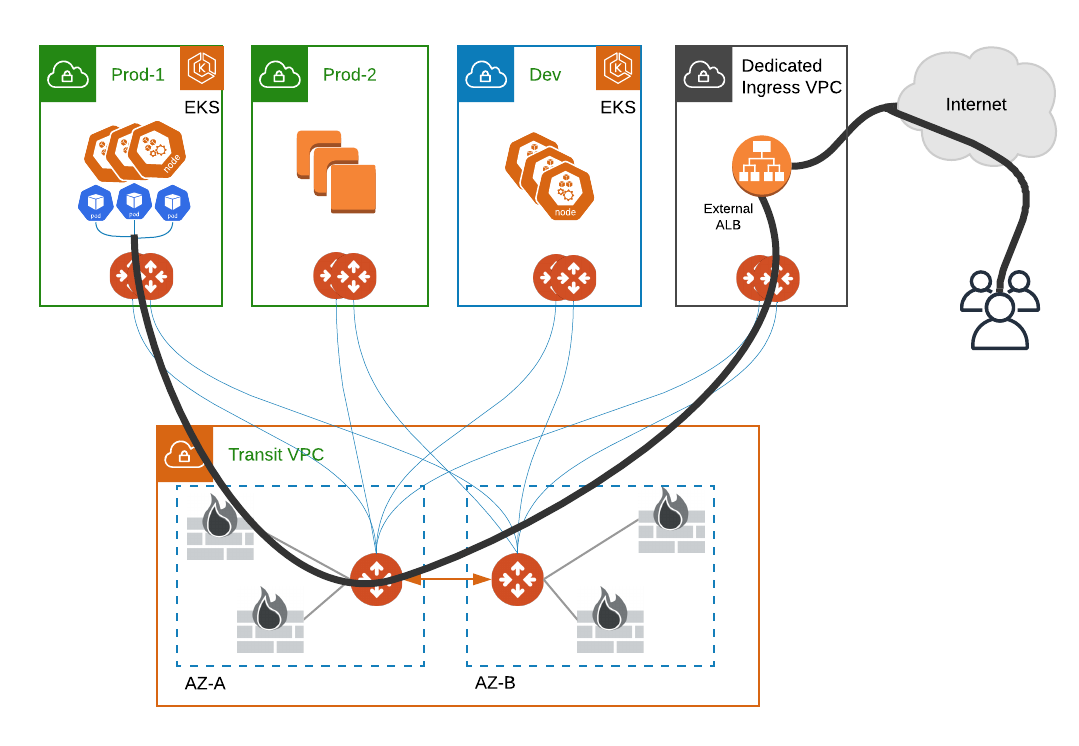

Within the EKS VPC, an NLB is used to provide a static IP per AZ. It is possible to use an ALB for this, but that requires complicated orchestration of IP addresses as the ALB scales in and out (its IPs are dynamic and can change).
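As a rough illustration of the EKS side of this design, the sketch below builds a Kubernetes Service of type LoadBalancer that asks for an internal NLB. It assumes the legacy `service.beta.kubernetes.io/aws-load-balancer-type` and `service.beta.kubernetes.io/aws-load-balancer-internal` annotations; newer versions of the AWS Load Balancer Controller use different annotations, so treat these as an assumption to verify. The service name, selector, and ports are hypothetical.

```python
import json


def internal_nlb_service(name, app_label, port, target_port):
    """Build a Service manifest that requests an internal NLB."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                # Ask for a Network Load Balancer instead of a Classic one.
                "service.beta.kubernetes.io/aws-load-balancer-type": "nlb",
                # Keep it private so nothing is exposed to the internet.
                "service.beta.kubernetes.io/aws-load-balancer-internal": "true",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


# Hypothetical names and ports for illustration only.
service = internal_nlb_service("web", "web", port=80, target_port=8080)

print(json.dumps(service, indent=2))
```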

Just like in the single load balancer architecture, we have a dedicated ingress VPC. Here we place our internet-facing load balancer, which can be either an NLB or an ALB. Using an ALB allows consolidation of multiple services, since the ALB supports multiple hostnames. In either case, an IP target group is created to target the private IP addresses of the load balancer in the EKS VPC. An advantage of this architecture is that the ingress VPC and EKS VPC(s) can span multiple AWS accounts for a reduced blast radius and separation of responsibilities. This architecture is shown in the accompanying diagram.
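The IP target group step can be sketched as follows. The function only builds the keyword arguments for the ELBv2 `RegisterTargets` call, so it runs without AWS credentials. The ARN and IP addresses are hypothetical, and setting `AvailabilityZone` to `"all"` reflects my understanding of what the API expects for IP targets that live outside the target group's VPC.

```python
import ipaddress


def build_register_targets_request(target_group_arn, nlb_private_ips, port):
    """Build kwargs for the ELBv2 RegisterTargets call."""
    targets = []
    for ip in nlb_private_ips:
        # The internal NLB should only ever expose private addresses.
        if not ipaddress.ip_address(ip).is_private:
            raise ValueError(f"{ip} is not a private address")
        targets.append({"Id": ip, "Port": port, "AvailabilityZone": "all"})
    return {"TargetGroupArn": target_group_arn, "Targets": targets}


# Hypothetical ARN and per-AZ NLB addresses for illustration only.
request = build_register_targets_request(
    target_group_arn=(
        "arn:aws:elasticloadbalancing:us-east-1:111122223333:"
        "targetgroup/eks-nlb/0123456789abcdef"
    ),
    nlb_private_ips=["10.20.1.10", "10.20.2.10"],
    port=80,
)

print(request)
```

With boto3 installed and credentials configured, the result could be passed along as `boto3.client("elbv2").register_targets(**request)`.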

Insight and Visibility with Aviatrix

Using the Aviatrix transit brings several benefits to a centralized ingress architecture. From an infrastructure perspective, FireNet takes the heavy lifting out of deploying and integrating nextgen firewalls. I’ve done this manually myself in the past, and in the best case it can take hours. It’s also difficult to troubleshoot. With FireNet we can deploy and integrate quickly through an intuitive GUI or codify the deployment in Terraform.

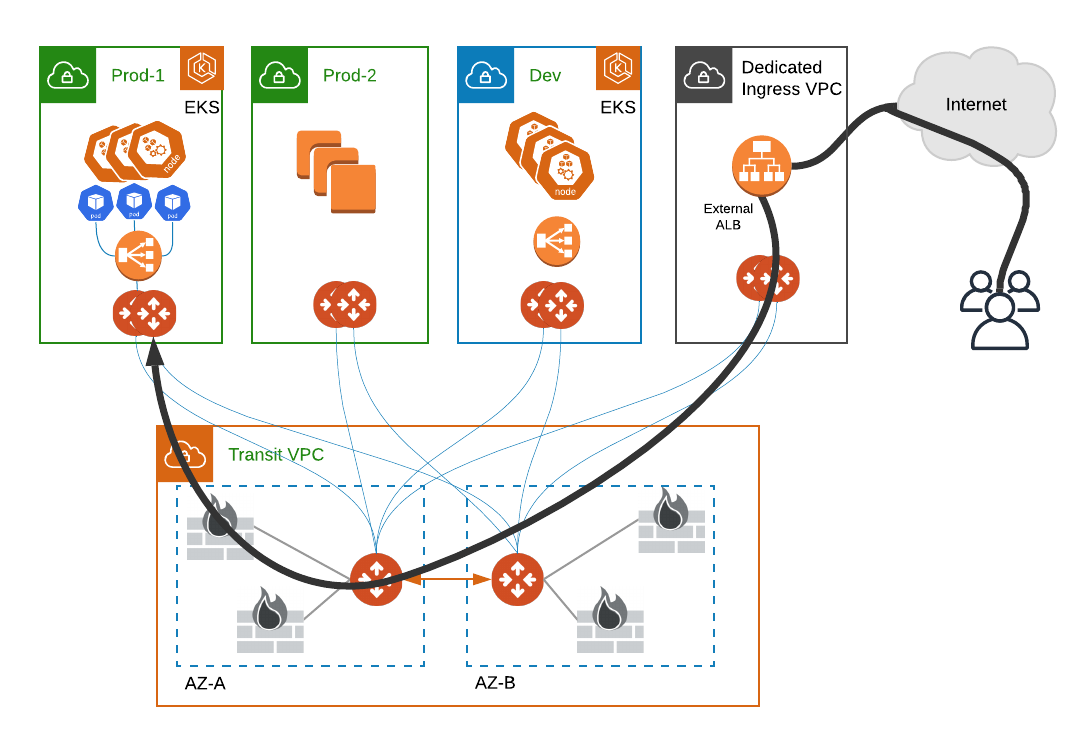

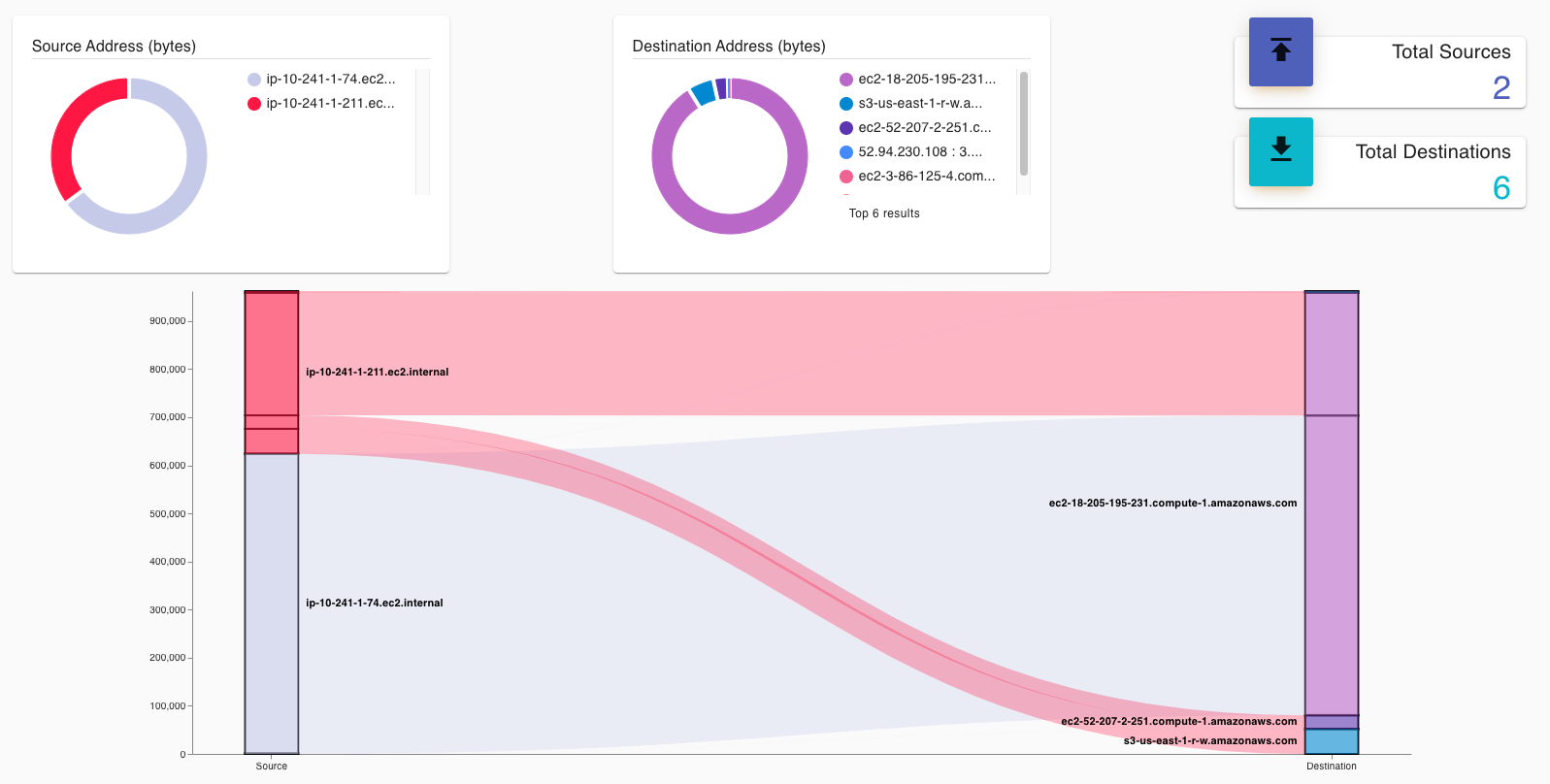

In addition to simplified nextgen service insertion, there is an unprecedented level of insight and visibility. Since all the traffic flows through Aviatrix gateways, there is full visibility and control over it. NetFlow data is exported to Copilot, which allows for visualization and traffic analysis, both of which are key to day-2 operations and troubleshooting.

In the screenshots below we can see a visual breakdown of all the traffic. EKS talks to several other AWS services and we have visibility into all this traffic!

It is also easy to identify top talkers and view volumes of traffic going out to external sources. In the following screenshot, for example, we can see the traffic going to the EKS control plane and S3.

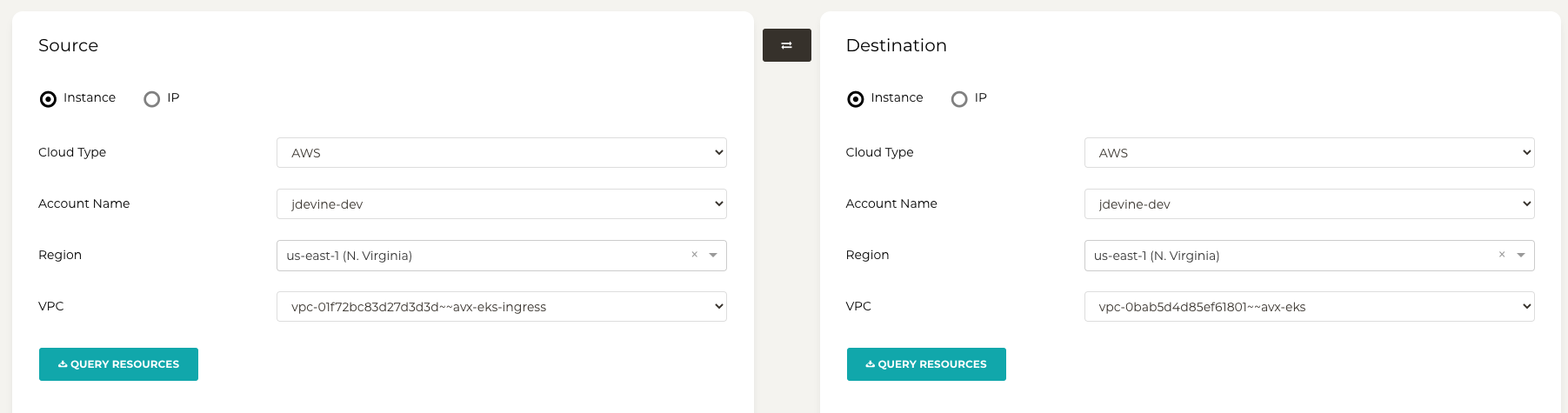

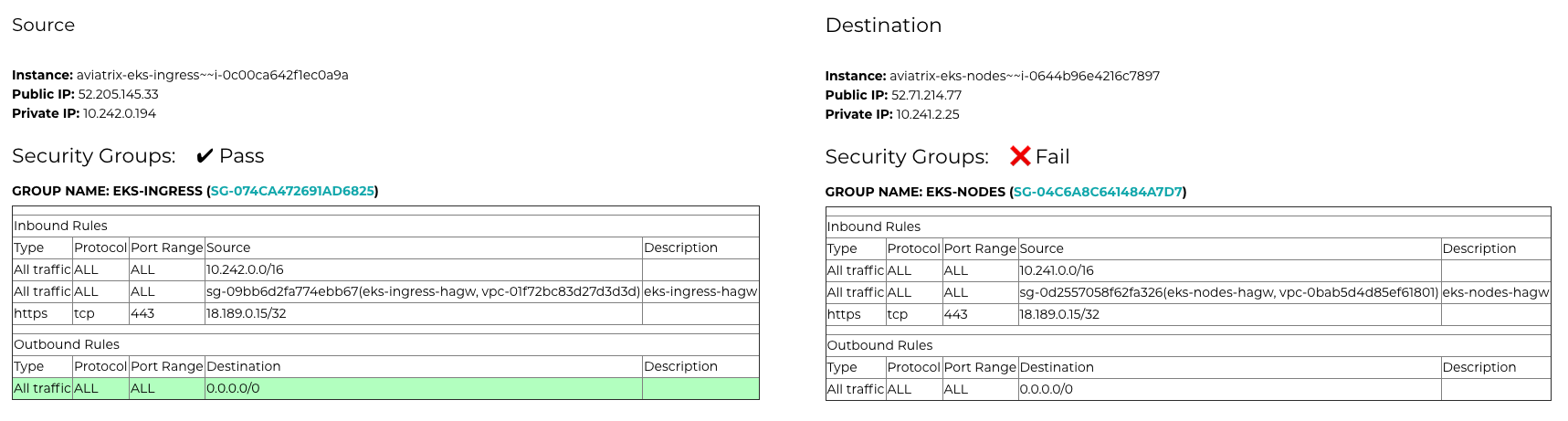

FlightPath, an Aviatrix tool, can be used to troubleshoot traffic flows. For example, let’s say I wanted to see if my ingress VPC could reach the EKS cluster. I’d simply select the VPCs as shown below. FlightPath will look at NACLs, security groups, and route tables to quickly identify any anomalies in traffic patterns.

The results are quite telling. I can quickly see that there’s only an inbound rule in a security group for a specific IP. I was able to determine this quickly without having to dig into low-level details. I can follow the hyperlink to the security group name and change the source IP address to be the ingress VPC CIDR to allow the ingress VPC to send traffic to EKS pods.
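To see why that change matters, here is a small sketch using Python's standard `ipaddress` module. It models a security group's inbound sources as a list of CIDRs and checks whether a load balancer address in the ingress VPC would be allowed. All addresses and CIDRs are hypothetical, and real security group evaluation also considers protocol and port, which this ignores.

```python
import ipaddress


def rule_allows(source_cidrs, client_ip):
    """Return True if client_ip falls inside any allowed source CIDR."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in source_cidrs)


# Hypothetical values for illustration only.
ingress_vpc_cidr = "10.10.0.0/16"
load_balancer_ip = "10.10.1.25"       # an address inside the ingress VPC

sources_before = ["192.0.2.10/32"]    # single specific IP, as found above
sources_after = [ingress_vpc_cidr]    # after widening to the ingress VPC

print(rule_allows(sources_before, load_balancer_ip))  # False
print(rule_allows(sources_after, load_balancer_ip))   # True
```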

Conclusion

How K8s services will be exposed is one of the many networking considerations involved with the K8s architecture. In this post I highlighted several design patterns that can be used to centralize and secure ingress traffic and discussed the advantages of doing so. I hope this post was informative. If you’d like to learn more, please reach out!

About the author

James Devine is a Principal Solutions Architect at Aviatrix. He joined from AWS, where he was a global AWS Networking SME. He brings a deep knowledge of AWS networking combined with an on-premises knowledge of networking, storage, and compute infrastructures. Prior to AWS, James was a Senior Infrastructure Engineer at MITRE where he helped some of the largest US government agencies build infrastructure and modernize with cloud. He enjoys helping customers solve their toughest networking challenges with eloquent solutions.